We are delighted to announce the release of Alibi Explain v0.8.0, featuring support for Partial Dependence plots, enabling global explainability of any model.

Partial Dependence plots—global insight into model behaviour

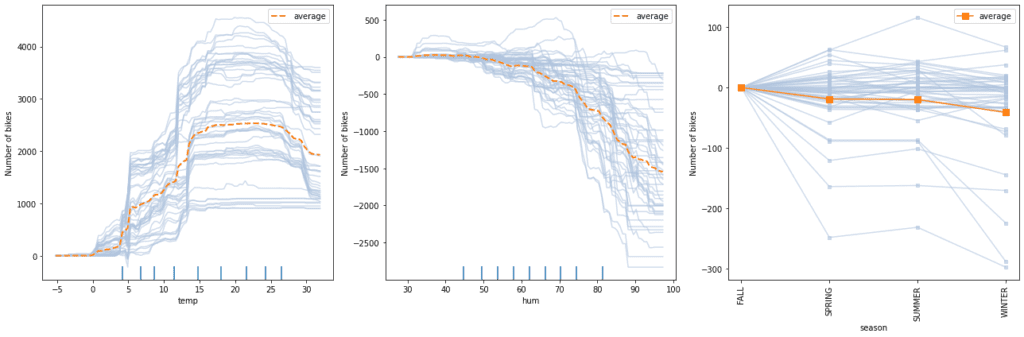

Partial Dependence (PD) plots are a popular method for visualising the marginal effect that one or two features have on the predicted outcome of a machine learning model. By inspecting PD plots, practitioners can see how the model response relates to a feature or pair of features, and how predictions trend overall as the feature values are varied. For example, Figure 1 shows that increasing temperature has a positive effect on the predicted number of bike rentals up to about 17°C, after which the prediction flattens until the weather becomes too hot (about 27°C), where predictions drop.

PD plots aggregate individual explanations called Individual Conditional Expectations (ICE) into a “global” view by averaging them over the data points. Additionally, overlaying ICE curves on PD plots can help uncover heterogeneity in how the model responds for individual data points.
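To make the relationship between ICE and PD concrete, here is a minimal NumPy sketch. It uses a brute-force approach and is not the Alibi Explain implementation; the helper `ice_and_pd` and the toy model `toy_predict` are hypothetical names introduced only for illustration.

```python
import numpy as np

def ice_and_pd(predict, X, feature, grid):
    """Return ICE curves of shape (n_samples, n_grid) and their mean (the PD curve)."""
    X = np.asarray(X, dtype=float)
    ice = np.empty((X.shape[0], len(grid)))
    for j, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value   # set the feature to one grid value for every row
        ice[:, j] = predict(X_mod)  # one prediction per data point
    return ice, ice.mean(axis=0)    # PD is the average of the ICE curves

# Hypothetical toy model: the effect of feature 0 flips sign with feature 1.
def toy_predict(X):
    return X[:, 0] * np.where(X[:, 1] > 0, 1.0, -1.0)

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
grid = np.linspace(-2, 2, 5)
ice, pd_curve = ice_and_pd(toy_predict, X, feature=0, grid=grid)
```

In this toy setting each ICE curve has slope +1 or -1, while the averaged PD curve is much flatter, which is the kind of heterogeneity that a PD plot alone can hide.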

In Alibi Explain v0.8.0 we support PD and ICE for any black-box model via the new PartialDependence explainer class. We also provide a flexible convenience plotting function, plot_pd, for immediate inspection of PD and ICE plots. In addition, we introduce a TreePartialDependence class that supports a fast, recursive algorithm for some tree-based models (currently a subset of scikit-learn models).

The Alibi Explain implementation of Partial Dependence provides many additional benefits over the implementation in the scikit-learn inspection module:

- Full support for black-box models, not just scikit-learn estimators

- Full support for categorical variables, including one-way categorical PD as well as two-way categorical-categorical and categorical-numerical PD

- Higher-order PD calculations supported (beyond one-way and two-way)

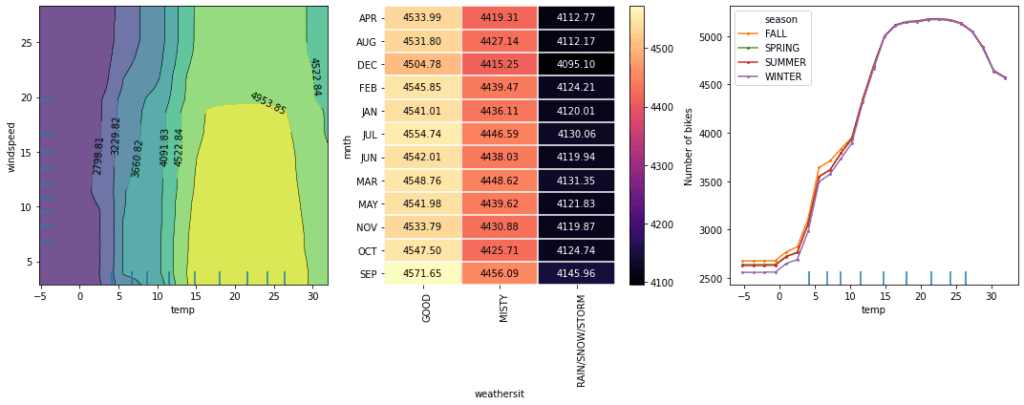

- Flexible plotting functionality of one-way and two-way PD (see Figure 2 for an example of 2-way PD plots)
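As a rough illustration of what a two-way categorical-numerical PD computes, the sketch below evaluates a model on every combination of a category and a numerical grid value and averages the predictions over the dataset. This is a brute-force NumPy sketch under stated assumptions, not the library's code: `pd_two_way`, `toy_predict`, the ordinal encoding of the categorical feature, and the grid choices are all hypothetical.

```python
import numpy as np
from itertools import product

def pd_two_way(predict, X, features, grids):
    """Brute-force two-way PD: mean prediction for every pair of grid values."""
    f0, f1 = features
    out = np.empty((len(grids[0]), len(grids[1])))
    for (i, v0), (j, v1) in product(enumerate(grids[0]), enumerate(grids[1])):
        X_mod = X.copy()
        X_mod[:, f0] = v0                  # fix the first feature
        X_mod[:, f1] = v1                  # fix the second feature
        out[i, j] = predict(X_mod).mean()  # average over all data points
    return out

rng = np.random.default_rng(0)
n = 300
X = np.column_stack([
    rng.integers(0, 3, size=n),  # feature 0: categorical, ordinally encoded as 0/1/2
    rng.uniform(0, 1, size=n),   # feature 1: numerical
    rng.normal(size=n),          # feature 2: numerical, averaged out by PD
]).astype(float)

offsets = np.array([0.0, 1.0, 3.0])  # hypothetical per-category effect

def toy_predict(X):
    return offsets[X[:, 0].astype(int)] + 2.0 * X[:, 1] + X[:, 2]

cat_grid = np.array([0.0, 1.0, 2.0])  # one grid point per category
num_grid = np.linspace(0, 1, 4)
pd_grid = pd_two_way(toy_predict, X, (0, 1), (cat_grid, num_grid))
```

Each row of `pd_grid` corresponds to one category and each column to one numerical grid value. The cost grows with the product of the grid sizes, which is one reason higher-order PD is usually computed on coarse grids.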

Whilst Alibi Explain already supports one-way Accumulated Local Effects (ALE), which relax the feature-independence assumption underlying PD plots, the inclusion of PD plots provides a versatile baseline of global explanations for any model. For more information on the usage and interpretation of PD plots, please see our documentation.

Other improvements

As usual, the v0.8.0 release also includes various improvements and bug fixes to the library. We have improved our documentation, particularly regarding the usage and interpretation of Anchor explanations. We have also refreshed our example notebooks, ensuring all examples run end-to-end with the latest dependency versions. Finally, we have fixed numerous bugs and made minor improvements to the AnchorTabular, AnchorImage, ALE, CounterfactualProto, CounterfactualRL and TreeShap explainers.