One of the biggest blockers to adopting neural networks across industries is that modern machine learning models are often an algorithmic “black box”. Explainable AI is a relatively new area of research that aims to help organisations deploy models into production with confidence by providing human-interpretable explanations of model outputs.

There were various methodologies and research papers on the topic, but no standard library that brought together the best of these emerging explainable AI (XAI) techniques. This is why we released Alibi Explain in May 2019. Since then, the data science team has focused on adding new state-of-the-art techniques from open research and from our own R&D.

The Seldon data science team are delighted to announce v0.4.0 of Alibi Explain, our model explainability library. This release brings many algorithmic and code improvements, as well as a new and more intuitive API.

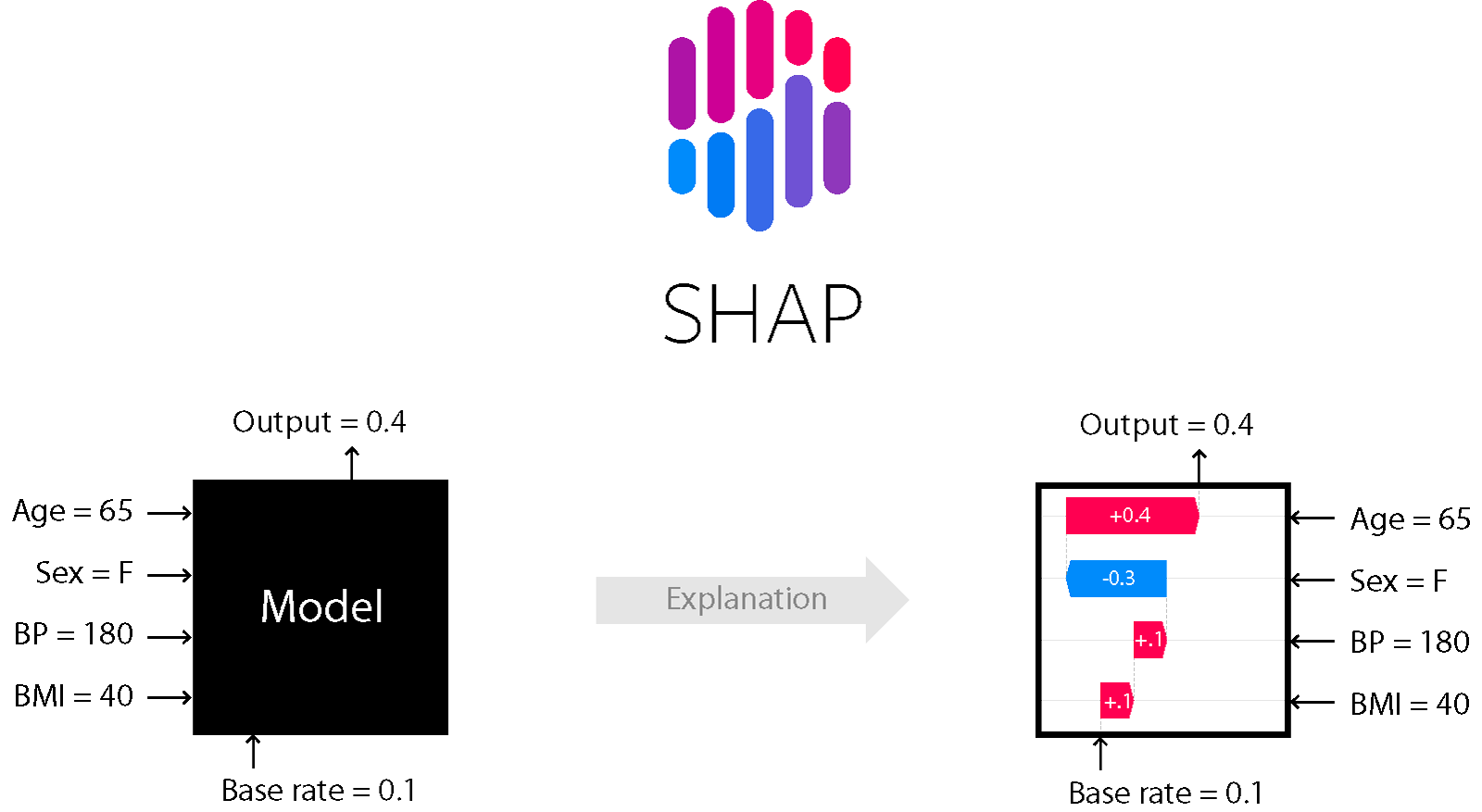

One of the most notable developments is our integration of Kernel SHAP for explaining black-box models. Kernel SHAP (SHapley Additive exPlanations) is a model-agnostic method that estimates Shapley values, a concept from cooperative game theory, to produce human-interpretable explanations of black-box model predictions. It is mainly suited to regression and classification models applied to tabular data. It produces feature attributions showing which features contributed positively or negatively to a given prediction (see diagram below).
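To make the idea behind Kernel SHAP concrete, here is a minimal sketch in plain NumPy. It is not Alibi's implementation. For a small number of features M, it enumerates every coalition of features and weights each coalition z by the Shapley kernel (M − 1) / (C(M, |z|) · |z| · (M − |z|)). It then solves a constrained weighted least-squares problem, and the resulting coefficients are the feature attributions. The function name `kernel_shap` is hypothetical. So is the choice to "switch off" absent features by substituting values from a background dataset and averaging the model output. Practical implementations sample coalitions rather than enumerating all 2^M of them.

```python
import itertools
from math import comb

import numpy as np


def kernel_shap(predict, x, background):
    """Exact (fully enumerated) Kernel SHAP for a single instance.

    Hypothetical helper for illustration, not Alibi's API.
    predict:    callable mapping an (n, M) array to an (n,) array of outputs
    x:          the (M,) instance to explain
    background: (B, M) array whose values stand in for "absent" features
    Returns (phi0, phi): the base value and the (M,) feature attributions.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    M = x.shape[0]

    def value(mask):
        # Coalition features take x's values; all others keep background
        # values, and the model output is averaged over the background rows.
        data = background.copy()
        data[:, mask] = x[mask]
        return predict(data).mean()

    phi0 = value(np.zeros(M, dtype=bool))          # no features "switched on"
    total = value(np.ones(M, dtype=bool)) - phi0   # attributions must sum to this
    if M == 1:
        return phi0, np.array([total])

    rows, weights, targets = [], [], []
    # Empty and full coalitions have infinite Shapley-kernel weight, so they
    # are imposed as constraints (phi0 above, sum(phi) == total below).
    for k in range(1, M):
        w = (M - 1) / (comb(M, k) * k * (M - k))   # Shapley kernel weight
        for subset in itertools.combinations(range(M), k):
            z = np.zeros(M)
            z[list(subset)] = 1.0
            rows.append(z)
            weights.append(w)
            targets.append(value(z.astype(bool)) - phi0)

    Z, W, y = np.array(rows), np.array(weights), np.array(targets)

    # Eliminate the last coefficient via phi_M = total - sum(phi_1..phi_{M-1}),
    # then solve the remaining unconstrained weighted least-squares problem.
    A = Z[:, :-1] - Z[:, [-1]]
    b = y - Z[:, -1] * total
    sw = np.sqrt(W)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], b * sw, rcond=None)
    phi = np.append(coef, total - coef.sum())
    return phi0, phi
```

A useful sanity check is a linear model f(x) = w·x + c. There, each attribution reduces to w_i multiplied by (x_i minus the background mean of feature i). Positive values push the prediction above the base value `phi0`, and negative values pull it below.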

Image credit: Scott Lundberg (https://github.com/slundberg/shap)

Our integration fully supports datasets with categorical variables, and we include in-depth examples showing how to produce and interpret the resulting explanations.
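One common way to explain a categorical variable is to treat all of its one-hot columns as a single "player". That way, the variable receives one attribution instead of one per column. The sketch below illustrates this with exact Shapley values computed by brute-force enumeration, which is simpler than Kernel SHAP's regression but only feasible for a handful of groups. The one-hot encoding, the function name `grouped_shapley` and its `groups` argument are all assumptions for illustration. They do not describe how Alibi handles categorical data internally.

```python
import itertools
from math import factorial

import numpy as np


def grouped_shapley(predict, x, background, groups):
    """Exact Shapley values where each group of columns acts as one player.

    Hypothetical helper for illustration, not Alibi's API.
    groups: list of lists of column indices; e.g. the one-hot columns that
            encode a single categorical variable form one group.
    """
    x = np.asarray(x, dtype=float)
    G = len(groups)

    def value(players):
        # Columns of every group in the coalition take x's values; the rest
        # keep background values. Average the output over background rows.
        data = np.array(background, dtype=float)
        cols = [c for g in players for c in groups[g]]
        data[:, cols] = x[cols]
        return predict(data).mean()

    phi = np.zeros(G)
    for g in range(G):
        others = [h for h in range(G) if h != g]
        for k in range(G):
            # Shapley weight |S|! (G - |S| - 1)! / G! for coalitions S without g
            weight = factorial(k) * factorial(G - k - 1) / factorial(G)
            for S in itertools.combinations(others, k):
                phi[g] += weight * (value(S + (g,)) - value(S))
    return phi
```

For example, column 0 might be numeric while columns 1 to 3 one-hot encode a hypothetical "colour" variable. Passing `groups=[[0], [1, 2, 3]]` then yields two attributions, one per original feature. Like Kernel SHAP, these attributions sum to the difference between the prediction and the base value.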

With our new and improved API, every algorithm now returns a complete ‘Explanation’ object. All the necessary information is stored in its ‘meta’ attribute (metadata about the explainer) and its ‘data’ attribute (the explanation itself). This makes the library more intuitive and easier to use. For deeper technical information, read the Kernel SHAP docs.

Alibi Explain is available under an Apache 2.0 license, and we welcome contributions and input from our open-source community. Our community call on 2nd April will focus on the Alibi Explain and Alibi Detect libraries. We'd love to chat with you if you're using Alibi or want to learn more about it. Join our community Slack group for updates, or get in touch to receive an invite to the community call.

At Seldon, we believe explanations play an essential role in the machine learning workflow within organisations. So we have integrated Alibi explanations into the model auditing components of our enterprise product, Seldon Deploy. Register here to get early access.