Alibi Detect v0.7.2 features two powerful new drift detectors, Spot-the-diff and Learned Kernel, as well as a new online drift detection example on medical imaging data.

The new Spot-the-diff drift detector extends the existing Classifier drift detector. The classifier is specified in a way that makes its detections interpretable and attributes the drift to individual features. The method is inspired by the work of Jitkrittum et al. (2016). This feature-level feedback provides valuable insight into the nature of the changes in the underlying data.

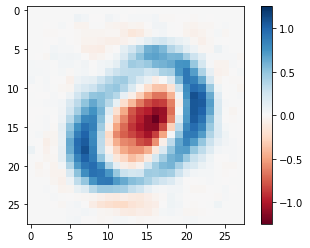

A simple example is illustrated in the image above. The reference data consists of images of the digits 1 to 9, while the test data also contains the digit 0. The pixel-wise drift attribution shows that the test instances were, on average, more similar to an instance with below-average pixel values in the middle and above-average values where a typical 0 takes shape. The Spot-the-diff detector also assigns a score to each instance indicating whether it is more likely to belong to the reference or the test data. As a result, the method allows for both feature-level and instance-level drift attribution! Check out the example notebook for more detailed information.
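To make the idea concrete, here is a minimal NumPy sketch of a spot-the-diff style classifier under simplifying assumptions. It is not Alibi Detect's implementation, and the names `spot_the_diff_sketch` and `gaussian_kernel` are hypothetical. The classifier's logit is `b + weight * k(x, c + d)`, where `c` is the reference mean, `k` is a Gaussian kernel and `d` is a learned "diff" with an L1 penalty; it is trained with the logistic loss to separate reference (label 0) from test (label 1) instances. For brevity we assume a single diff, a crude bandwidth heuristic, and a kernel weight fixed to a positive constant (rather than learned), so that `d` points towards the location the test instances are more similar to. The learned `d` gives the feature-level attribution and the predicted probabilities give instance-level scores.

```python
import numpy as np


def gaussian_kernel(x, loc, sigma2):
    """Gaussian kernel similarity between each row of x and a single location."""
    return np.exp(-np.sum((x - loc) ** 2, axis=1) / (2.0 * sigma2))


def spot_the_diff_sketch(x_ref, x_test, weight=3.0, lam=0.01, lr=0.2, n_steps=1000):
    """Hypothetical sketch: learn one sparse diff d so that test instances are
    more similar than reference instances to the location mean(x_ref) + d."""
    x = np.concatenate([x_ref, x_test])
    y = np.concatenate([np.zeros(len(x_ref)), np.ones(len(x_test))])  # 1 = test
    c = x_ref.mean(axis=0)       # kernel location starts at the reference mean
    sigma2 = float(x.shape[1])   # crude bandwidth heuristic (assumption)
    d = np.zeros(x.shape[1])     # interpretable diff, one entry per feature
    b = 0.0                      # learned bias; the kernel weight stays fixed (assumption)
    for _ in range(n_steps):
        k = gaussian_kernel(x, c + d, sigma2)
        p = 1.0 / (1.0 + np.exp(-(b + weight * k)))  # P(instance comes from test data)
        g = (p - y) / len(y)                          # gradient of mean log-loss w.r.t. logit
        # chain rule: dk/dd = k * (x - c - d) / sigma2, plus the L1 subgradient on d
        grad_d = weight * (g * k) @ (x - c - d) / sigma2 + lam * np.sign(d)
        d -= lr * grad_d
        b -= lr * g.sum()
    # instance-level scores computed with the final parameters
    p = 1.0 / (1.0 + np.exp(-(b + weight * gaussian_kernel(x, c + d, sigma2))))
    return d, p[: len(x_ref)], p[len(x_ref):]


# usage on synthetic data: the test set is shifted in feature 0 only
rng = np.random.default_rng(0)
x_ref = rng.normal(size=(500, 5))
x_test = rng.normal(size=(500, 5))
x_test[:, 0] += 2.0
d, scores_ref, scores_test = spot_the_diff_sketch(x_ref, x_test)
print("diff:", np.round(d, 2))
print("mean score ref / test:", scores_ref.mean(), scores_test.mean())
```

On this toy data the largest entry of `d` should sit on feature 0, mirroring how the pixel-wise diff in the digits example highlights where a 0 takes shape, while the L1 penalty keeps the unaffected features close to zero.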

The Learned Kernel drift detector (Liu et al., 2020) extends the already integrated Maximum Mean Discrepancy (MMD) detector: a portion of the data is used to train a (deep) kernel so that it maximises an estimate of the test power of the resulting drift detector. It is straightforward to plug in your kernel of choice, which makes the method flexible across use cases. A working example of the detector on images can be found in this notebook.
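The train-then-test structure can be sketched in plain NumPy, with one big simplification: instead of training a deep kernel by gradient ascent, "learning" the kernel here means picking the Gaussian bandwidth that maximises a power proxy on one half of the data. The proxy is the unbiased MMD² estimate divided by an estimate of its standard deviation, in the spirit of the criterion in Liu et al. (2020). The held-out half is then used for a permutation test. All function names are hypothetical, the sketch assumes equal-size reference and test samples, and it is not Alibi Detect's `LearnedKernelDrift` implementation.

```python
import numpy as np


def gaussian_gram(a, b, bandwidth):
    """Gaussian kernel matrix k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 * bandwidth^2))."""
    sq = np.sum(a ** 2, axis=1)[:, None] + np.sum(b ** 2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth ** 2))


def h_matrix(k, ia, ib):
    """H_ij = k(x_i, x_j) + k(y_i, y_j) - k(x_i, y_j) - k(y_i, x_j) for equal-size index sets."""
    return k[np.ix_(ia, ia)] + k[np.ix_(ib, ib)] - k[np.ix_(ia, ib)] - k[np.ix_(ib, ia)]


def mmd2_unbiased(h):
    """Unbiased MMD^2 estimate: average of H_ij over i != j."""
    n = h.shape[0]
    return (h.sum() - np.trace(h)) / (n * (n - 1))


def power_proxy(x, y, bandwidth, eps=1e-8):
    """MMD^2 estimate divided by an estimate of its standard deviation under drift."""
    n = len(x)
    pooled = np.concatenate([x, y])
    h = h_matrix(gaussian_gram(pooled, pooled, bandwidth), np.arange(n), np.arange(n, 2 * n))
    var = 4.0 / n ** 3 * np.sum(h.sum(axis=1) ** 2) - 4.0 / n ** 4 * h.sum() ** 2
    return mmd2_unbiased(h) / np.sqrt(max(var, 0.0) + eps)


def learned_kernel_drift_sketch(x_ref, x, bandwidths, p_val=0.05, n_permutations=200, seed=0):
    assert len(x_ref) == len(x), "this sketch assumes equal sample sizes"
    rng = np.random.default_rng(seed)
    m = len(x) // 2
    # 1) "train" the kernel on the first half: keep the bandwidth with the best power proxy
    best_bw = max(bandwidths, key=lambda bw: power_proxy(x_ref[:m], x[:m], bw))
    # 2) run a permutation test with that kernel on the held-out halves
    ref_te, x_te = x_ref[m:], x[m:]
    n = len(x_te)
    pooled = np.concatenate([ref_te, x_te])
    k = gaussian_gram(pooled, pooled, best_bw)
    stat = mmd2_unbiased(h_matrix(k, np.arange(n), np.arange(n, 2 * n)))
    perm_stats = []
    for _ in range(n_permutations):
        idx = rng.permutation(2 * n)  # random relabelling of reference vs test
        perm_stats.append(mmd2_unbiased(h_matrix(k, idx[:n], idx[n:])))
    p = (1 + np.sum(np.array(perm_stats) >= stat)) / (1 + n_permutations)
    return {"bandwidth": best_bw, "mmd2": stat, "p_val": p, "is_drift": bool(p < p_val)}


# usage: the test sample is shifted along feature 0
rng = np.random.default_rng(1)
x_ref = rng.normal(size=(300, 5))
x = rng.normal(size=(300, 5))
x[:, 0] += 1.5
result = learned_kernel_drift_sketch(x_ref, x, bandwidths=[0.5, 1.0, 2.0, 4.0])
print(result)
```

Keeping the kernel-selection half separate from the test half matters: choosing the kernel on the same data that is then tested would bias the p-value towards detecting drift.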

With the addition of the Spot-the-diff and Learned Kernel drift detectors, the detectors in Alibi Detect cover nearly all data modalities, from text and images to molecular graphs, in both online and offline settings. Alibi Detect also comes with built-in functionality to detect different types of drift, such as covariate shift, prediction distribution drift or even model uncertainty drift, which can be used as a proxy for model performance degradation. In addition, several methods provide more granular feedback by showing which features or instances are most responsible for the drift. All drift detectors support both TensorFlow and PyTorch backends. Check out the documentation and examples for more information!