As machine learning gains traction across industries, businesses are finding novel uses for the technology. However, once your organisation moves beyond a handful of models, managing and observing this growing system can become a significant challenge.

Without proper monitoring, models can begin to degrade, with serious consequences not only for individual data science teams but across your business. Fines, reputational damage and financial losses are all distinct possibilities when decisions are informed by ‘black box’ predictions and calculations. It’s estimated that it takes approximately nine months to prototype and deploy a new model, a significant up-front investment of resources, so model degradation can quickly eat into the returns.

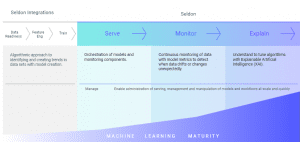

To help streamline development, deployment and monitoring, organisations are embracing MLOps to unlock their business potential. At Seldon, we have seen organisations move through what we call the ‘Machine Learning (ML) Maturity Model’ as they scale ML deployment and apply more sophisticated techniques to manage their growing infrastructure. Over time, this has evolved into a four-stage model that traces the journey organisations take as they address the problems they encounter when scaling their deployments. Once teams have moved through all four stages, they are often in a position to start realising the full value of ML in their organisation.

So where does your organisation sit on the scale?

Serving Models

Theoretical models are interesting and informative, but to deliver a competitive edge, they must be integrated with other applications and data sources and deployed to production. As the number of models in use increases, however, the method for serving them needs to scale, particularly as mission-critical systems come to rely on the ML environment.

To avoid downtime and risk, ‘canary’ deployments and similar techniques can be used to test new models. By routing a controlled proportion of live traffic to the new model, its performance can be assessed before it replaces its predecessor. Alternatively, a new model can first be tested against historical data to further minimise the risk to operations.
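To make the canary idea concrete, here is a minimal sketch in plain Python of how a traffic split works. The `CanaryRouter` class and its `canary_fraction` parameter are hypothetical illustrations, not part of Seldon Core’s API; in practice, a serving platform handles the split in its deployment configuration. The router sends roughly `canary_fraction` of requests to the new model and counts how many each model served, so the canary’s metrics can be compared with the stable model’s before it is promoted.

```python
import random
from typing import Callable, Dict, List

# A "model" here is any callable mapping a feature vector to a prediction.
Model = Callable[[List[float]], float]


class CanaryRouter:
    """Hypothetical router: sends a fixed fraction of requests to a canary model."""

    def __init__(self, stable: Model, canary: Model, canary_fraction: float, seed: int = 0):
        if not 0.0 <= canary_fraction <= 1.0:
            raise ValueError("canary_fraction must be between 0 and 1")
        self.stable = stable
        self.canary = canary
        self.canary_fraction = canary_fraction
        self._rng = random.Random(seed)  # seeded so the split is reproducible
        self.counts: Dict[str, int] = {"stable": 0, "canary": 0}

    def predict(self, features: List[float]) -> float:
        # random() returns a value in [0, 1), so a fraction of 0.0 never routes
        # to the canary and a fraction of 1.0 always does.
        if self._rng.random() < self.canary_fraction:
            self.counts["canary"] += 1
            return self.canary(features)
        self.counts["stable"] += 1
        return self.stable(features)
```

A fixed seed keeps the split reproducible in tests; a production router would more likely hash a request or user ID so that each caller consistently sees the same model.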

So, the first step towards ML maturity is streamlining and orchestrating the way you serve models. Seldon Core has a proven pedigree here, but more is required for true ML maturity.

Monitoring Models

Model drift is to be expected. Changes in the external environment and in incoming data will cause a model to drift, underperform and generate anomalous results. Monitoring to identify and mitigate drift is an essential step towards maturity and towards getting maximum value from your models.

There are three reasons you must build out a robust ML monitoring capability to avoid performance issues:

Feature Distribution Changes

Feature distributions may shift away from those in your training data. Because ground-truth labels are often delayed or too costly to collect, you frequently cannot measure the deployed model’s performance directly, so you must rely on proxies such as drift detectors on the input features, output distributions or model uncertainties.
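As one example of such a proxy, the sketch below applies a classic two-sample Kolmogorov-Smirnov (KS) test to each input feature, comparing a reference sample (for example, training data) with recent production inputs. A feature is flagged when its KS statistic exceeds the asymptotic critical value c(α) · sqrt((n + m) / (n · m)), where c(α) = sqrt(-ln(α / 2) / 2), and a Bonferroni correction divides α across features. The function names are hypothetical, and the sketch assumes continuous numeric features; categorical features would need a different test, such as chi-squared.

```python
import numpy as np


def ks_statistic(reference: np.ndarray, current: np.ndarray) -> float:
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref = np.sort(reference)
    cur = np.sort(current)
    grid = np.concatenate([ref, cur])  # the gap can only peak at an observed value
    cdf_ref = np.searchsorted(ref, grid, side="right") / ref.size
    cdf_cur = np.searchsorted(cur, grid, side="right") / cur.size
    return float(np.max(np.abs(cdf_ref - cdf_cur)))


def detect_feature_drift(reference: np.ndarray, current: np.ndarray, alpha: float = 0.05) -> np.ndarray:
    """Return one boolean per column: True where the column appears to have drifted.

    reference: (n, d) array of baseline inputs; current: (m, d) array of recent inputs.
    """
    n, n_features = reference.shape
    m = current.shape[0]
    # Bonferroni: split alpha across features to limit false alarms overall.
    alpha_per_feature = alpha / n_features
    c_alpha = np.sqrt(-0.5 * np.log(alpha_per_feature / 2))
    threshold = c_alpha * np.sqrt((n + m) / (n * m))
    stats = np.array([ks_statistic(reference[:, j], current[:, j]) for j in range(n_features)])
    return stats > threshold
```

In use, `reference` would be a fixed sample of training inputs and `current` a sliding window of recent requests; a `True` entry points to the feature worth investigating first.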

Changing Relationships

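You may also encounter changes in the relationship between the features and the target (often called concept drift): the same inputs start to map to different outcomes, so a model can degrade even when its input distributions look unchanged.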
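Detecting this kind of change generally requires ground-truth labels, which often arrive late. Once they do, a simple approach is to track accuracy over a sliding window of recent predictions and raise an alert when it falls well below the accuracy measured at validation time. The sketch below assumes a classification model; `RollingAccuracyMonitor`, `baseline_accuracy`, `window_size` and `tolerance` are hypothetical names chosen for illustration.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Hypothetical monitor: alert when recent accuracy drops below baseline minus tolerance."""

    def __init__(self, baseline_accuracy: float, window_size: int = 500, tolerance: float = 0.05):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window_size` outcomes are kept.
        self.outcomes = deque(maxlen=window_size)

    def update(self, prediction, label) -> bool:
        """Record one labelled prediction and return True if an alert should fire."""
        self.outcomes.append(prediction == label)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough labelled feedback yet to judge
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline_accuracy - self.tolerance
```

The window size and tolerance trade sensitivity against false alarms: a small window reacts quickly but is noisier, while a larger one smooths out normal fluctuation at the cost of slower detection.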

Individual Outliers

A poorly calibrated model can be over-confident on out-of-distribution data and generate bad predictions for individual outliers. Models must be monitored for outliers and adversarial attacks to maintain the integrity of the model and its outputs.
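A simple illustration of per-instance outlier detection is the Mahalanobis distance from the training data’s mean, with an alert threshold set at a high quantile of the training scores. This is a swapped-in classical technique rather than a description of any particular product: it assumes roughly elliptical (Gaussian-like) numeric features, and it will not catch adversarial examples crafted to look in-distribution. `MahalanobisOutlierDetector` and its `quantile` parameter are hypothetical names.

```python
import numpy as np


class MahalanobisOutlierDetector:
    """Hypothetical detector: flags inputs far from the training data's centre."""

    def __init__(self, quantile: float = 0.99):
        self.quantile = quantile

    def fit(self, X: np.ndarray) -> "MahalanobisOutlierDetector":
        self.mean_ = X.mean(axis=0)
        # Pseudo-inverse tolerates a singular covariance (e.g. collinear features).
        self.precision_ = np.linalg.pinv(np.cov(X, rowvar=False))
        # Roughly (1 - quantile) of the training data will score above this.
        self.threshold_ = np.quantile(self.score(X), self.quantile)
        return self

    def score(self, X: np.ndarray) -> np.ndarray:
        diff = X - self.mean_
        # Row-wise quadratic form diff_i^T P diff_i; clip tiny negatives from rounding.
        squared = np.einsum("ij,jk,ik->i", diff, self.precision_, diff)
        return np.sqrt(np.maximum(squared, 0.0))

    def predict(self, X: np.ndarray) -> np.ndarray:
        """Return True for each row considered an outlier."""
        return self.score(X) > self.threshold_
```

Flagged instances are natural candidates for the drill-down and explanation steps described next, rather than being silently scored.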

Monitoring functionality must be well integrated with your operations, allowing users to drill down into individual instances and features to identify and understand poor performance. You should then be able to apply a range of explainability techniques, such as attribution methods, anchors and counterfactuals, for deeper investigation.

There is no one-size-fits-all solution for monitoring; a maturing organisation will need to combine these techniques into an approach that suits its use case and the compliance constraints of its industry. We see organisations in highly regulated industries such as healthcare and financial services moving through this stage far more quickly, as their teams are forced to meet the high expectations of their regulators. Monitoring is also an iterative process, and many teams will loop back to refine their monitoring capabilities or approaches to address internal risk concerns or adopt new techniques.

To move through this stage, organisations will need to provide insight into data distributions and how they affect model performance, often via indirect measures. Ultimately, the added value lies in a tool that combines this functionality in a way that is intuitive yet faithful to the actual model behaviour and underlying data distributions, without oversimplifying them.

Explaining Models

Serving and monitoring models provides definite value, but you will also need to understand how they arrive at their conclusions. This is particularly true for highly regulated industries that must be able to explain the decision-making process and its impacts.

Developing an explainability capability ensures you have the right information at the right time to answer any questions or concerns raised by internal auditors and external regulators. Importantly, these bodies may not ask for this data and metadata now, but could require today’s logs years from now.

Successful model governance helps businesses avoid non-compliance fines. Audit trails for your models provide transparency and the ability to roll back to a previous model if it performed better.

Mature ML deployments will integrate compliance capabilities such as those described to help keep models within risk requirements, protecting the business and improving outcomes.

Managing Models

Most IT systems use simplified administration and orchestration to reduce overheads and increase productivity – and ML deployments are no different. You will need to simplify management so that data scientists can focus on refining models rather than their administration.

The most mature deployments interconnect model serving, monitoring and explainability, allowing operators to see the bigger picture and system-wide trends. They use model management, monitoring and alerting tools to give developers a faster feedback loop, enabling faster iteration and a shorter time to ROI.

Organisations that manage their machine learning effectively can realise up to 30% faster growth with AI and 50% higher profit margins. So where is your business on the ML Maturity Model? And which tools do you need to help your ML capabilities mature and realise the bigger picture?

Operationalise Machine Learning for Every Organisation

Seldon moves machine learning from proof of concept (POC) to production and on to scale, reducing time-to-value so models can get to work up to 85% quicker. In this rapidly changing environment, Seldon can give you the edge you need to supercharge your performance.

With Seldon Deploy, your business can efficiently manage and monitor machine learning, minimise risk, and understand how machine learning models affect decisions and business processes, so you know your team has done its due diligence in creating a more equitable system while boosting performance.

Deploy machine learning in your organisation effectively and efficiently. Talk to our team about machine learning solutions today.