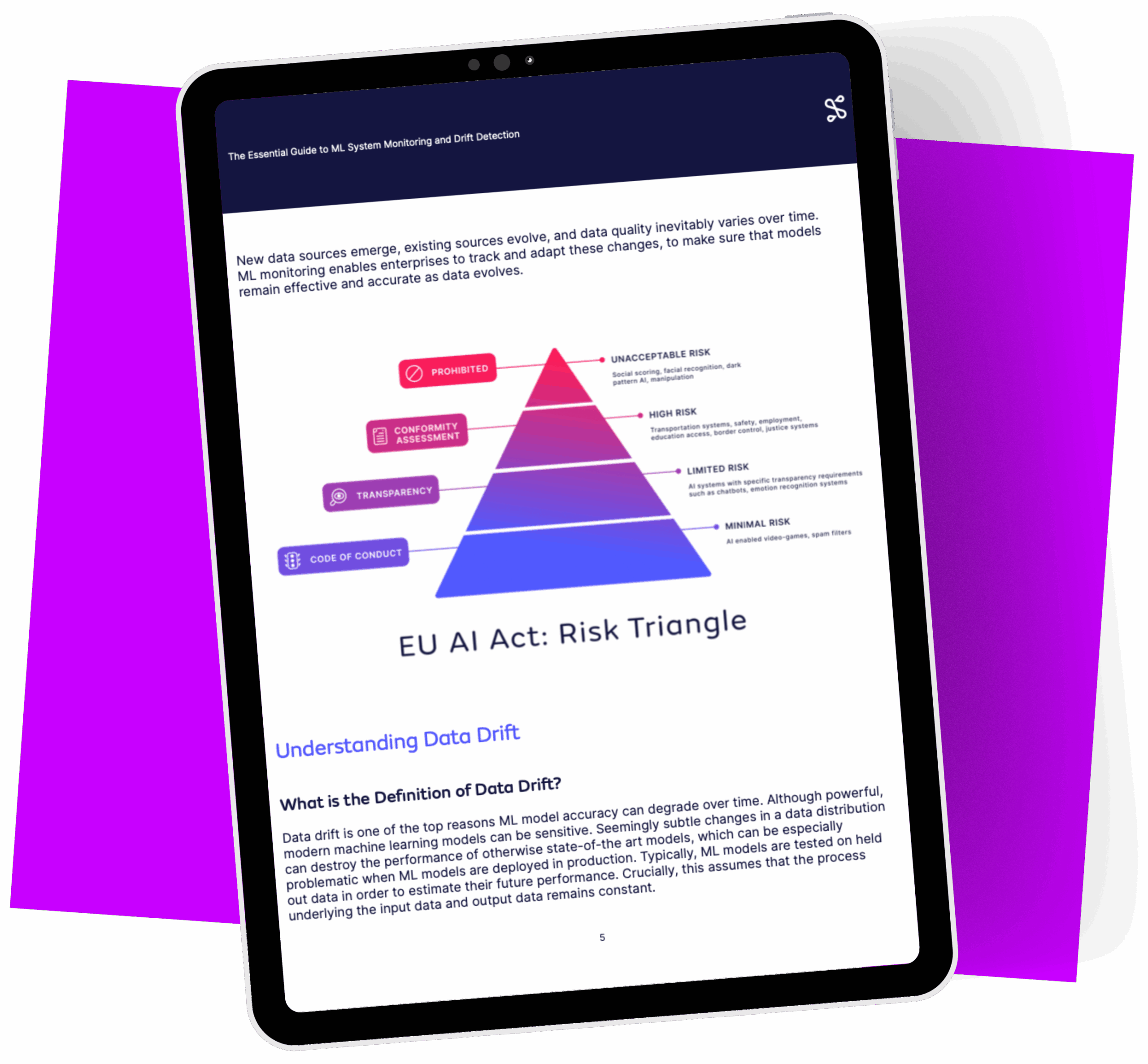

Building Trust in Production ML: A Complete Guide to Observability with Seldon

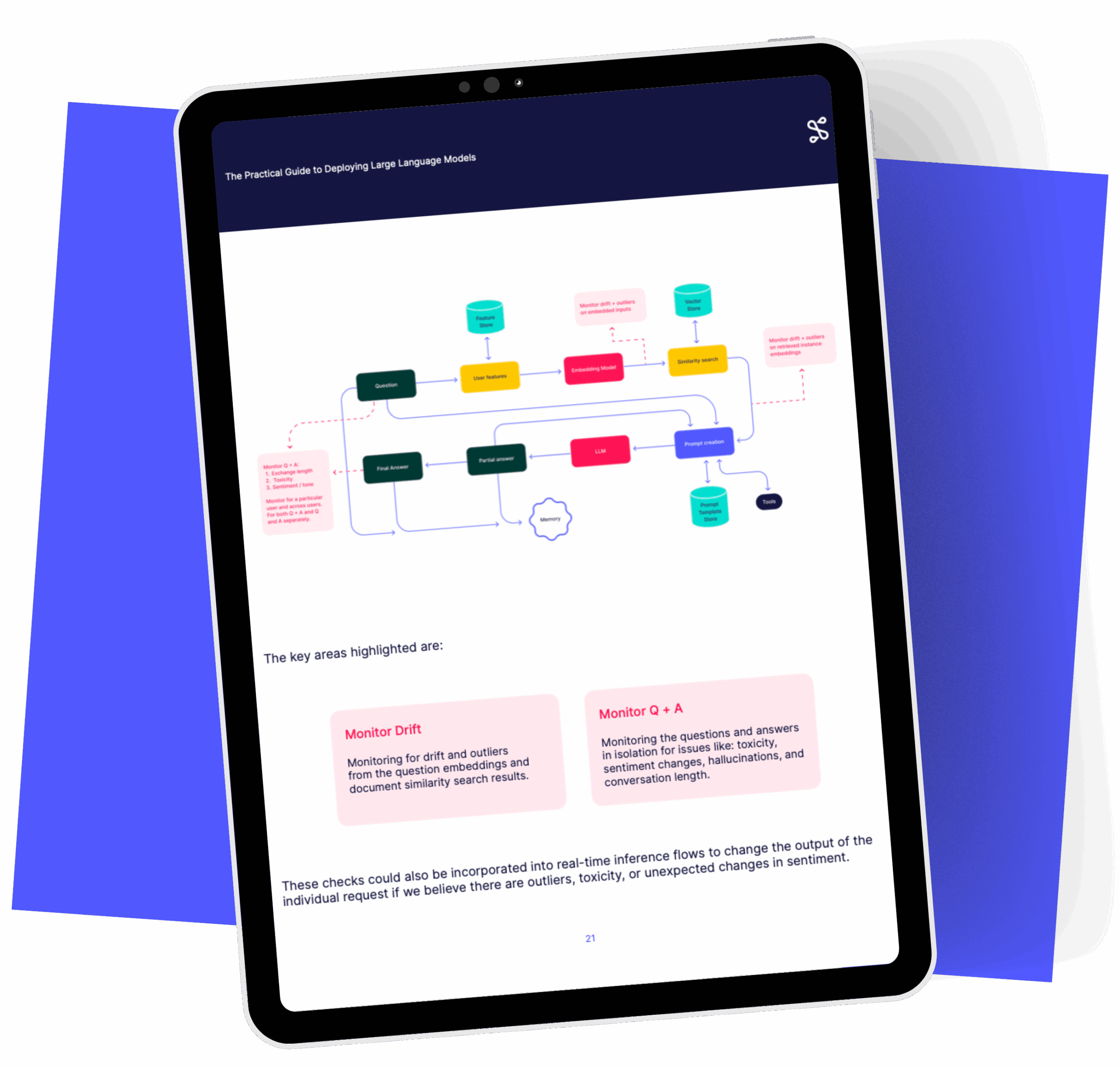

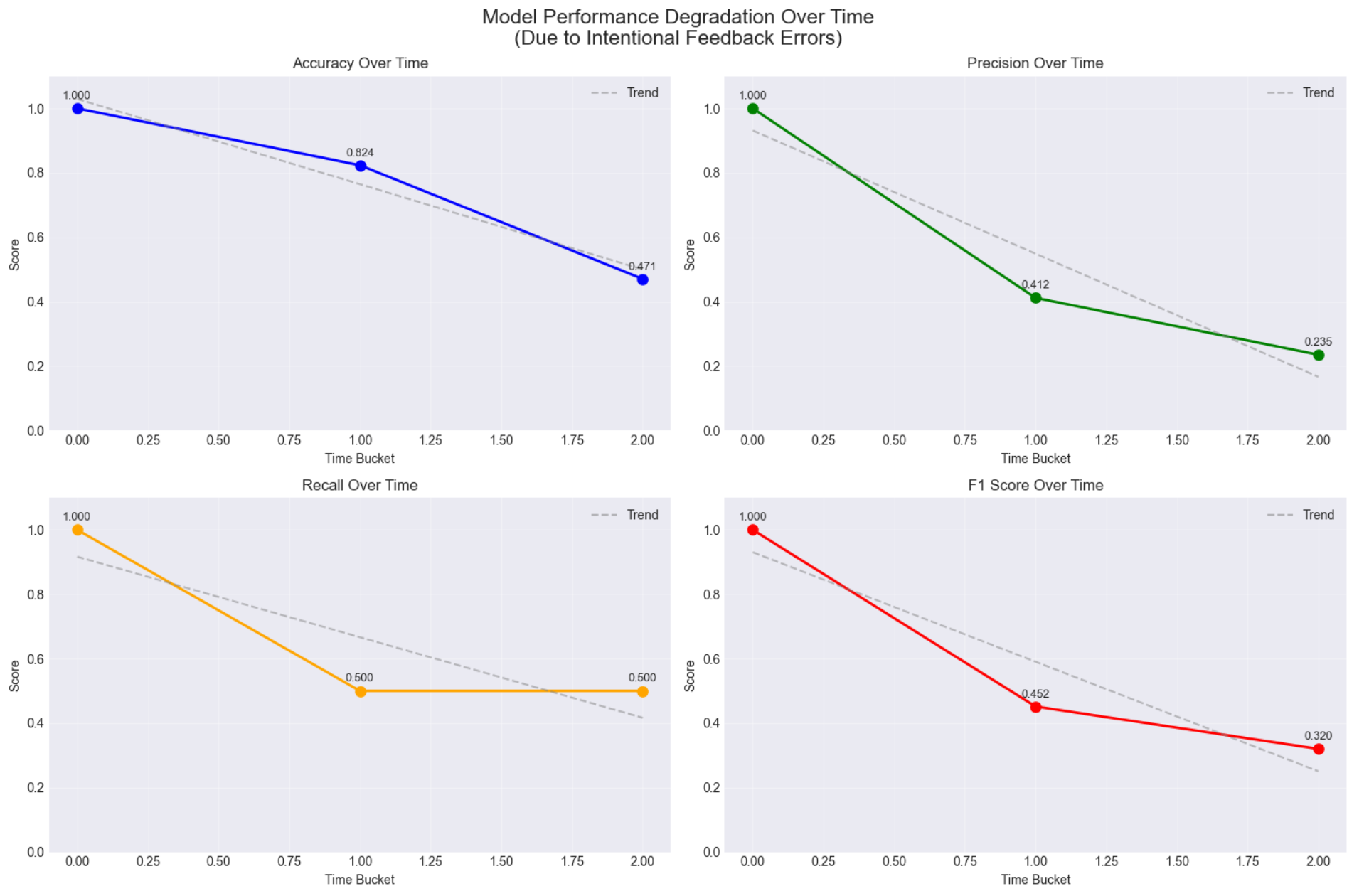

How to deploy, monitor, explain, and continuously improve traditional machine learning use cases in production with confidence.

The Hidden Challenge of Traditional ML in Production