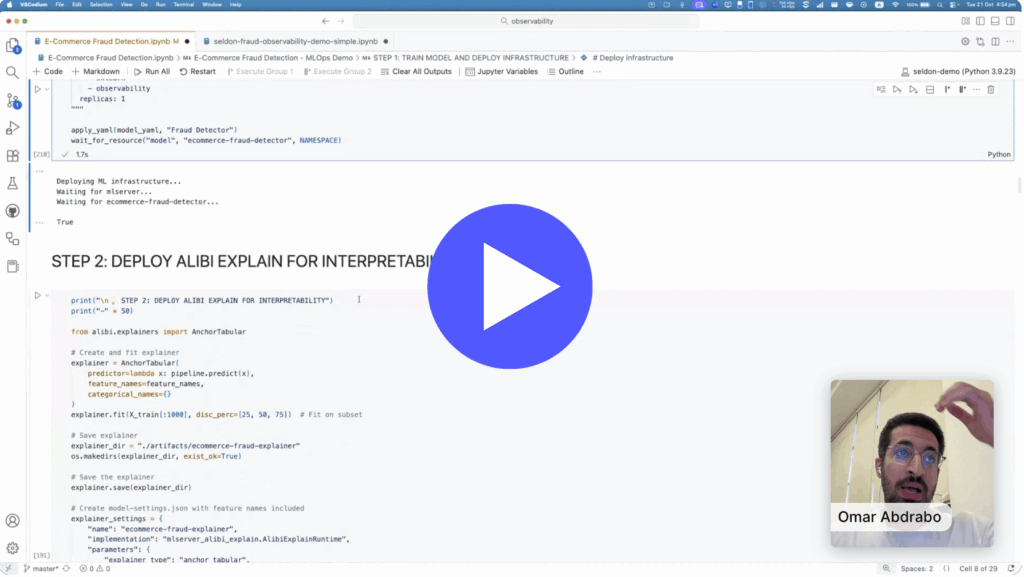

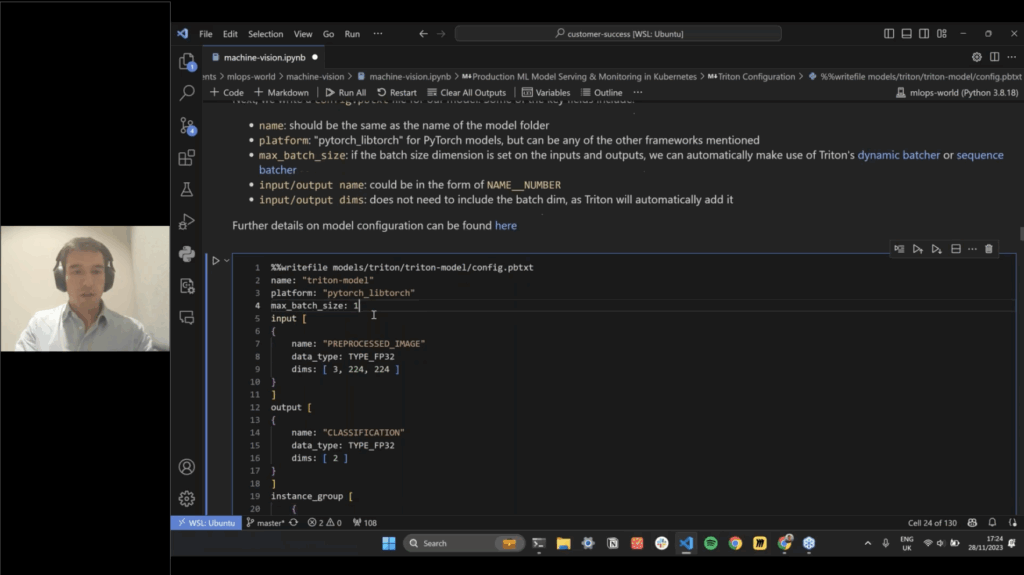

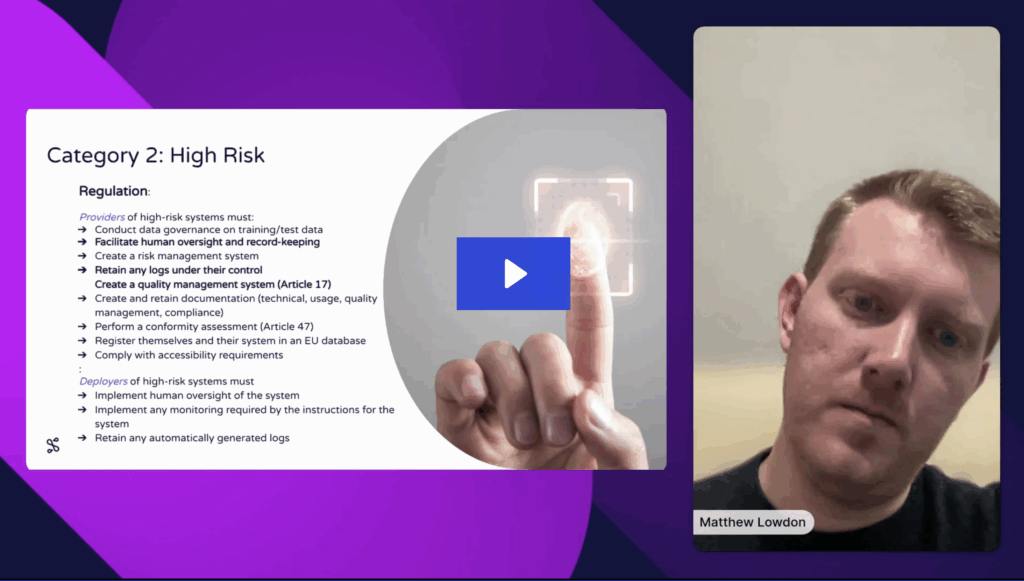

In this developer-focused session, we walk through a wide variety of model deployment strategies, from classic ML pipelines to embedded deployments, model-as-a-service, and edge use cases. We cover the foundational differences between ML and AI, the evolving role of LLMs, and how to handle real-world challenges such as A/B testing, shadow deployments, and online inference. The talk is packed with technical examples, YAML snippets, and live walkthroughs that demonstrate the flexibility of Seldon Core and MLServer.
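To make the A/B testing and shadow deployment ideas concrete, here is a minimal in-process sketch in Python. It is not Seldon's implementation. In the session's setting, this kind of routing is configured declaratively rather than hand-written. `ShadowRouter`, its weights, and the lambda "models" are hypothetical stand-ins. Each request id is hashed into a weighted bucket, so the same id always reaches the same model version. A shadow model receives a copy of every input, and its output is logged but never returned to the caller.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

Model = Callable[[Any], Any]


@dataclass
class ShadowRouter:
    """Hypothetical router: weighted A/B split plus an optional shadow model."""
    candidates: Dict[str, Model]
    weights: Dict[str, int]  # relative weights with a positive total, e.g. {"v1": 90, "v2": 10}
    shadow: Optional[Tuple[str, Model]] = None
    shadow_log: List[Tuple[str, str, Any]] = field(default_factory=list)

    def _pick(self, request_id: str) -> str:
        # Hash the request id into [0, total) so routing is sticky per id.
        total = sum(self.weights.values())
        bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % total
        for name, weight in self.weights.items():
            if bucket < weight:
                return name
            bucket -= weight
        raise RuntimeError("weights must have a positive total")

    def predict(self, request_id: str, features: Any) -> Tuple[str, Any]:
        name = self._pick(request_id)
        result = self.candidates[name](features)
        if self.shadow is not None:
            shadow_name, shadow_model = self.shadow
            try:
                shadow_out = shadow_model(features)
            except Exception as exc:  # a failing shadow must never break the live response
                shadow_out = exc
            # Shadow output is only recorded for offline comparison.
            self.shadow_log.append((request_id, shadow_name, shadow_out))
        return name, result


router = ShadowRouter(
    candidates={"v1": lambda x: x * 2, "v2": lambda x: x * 3},
    weights={"v1": 90, "v2": 10},
    shadow=("v3", lambda x: x * 4),
)
chosen, prediction = router.predict("req-42", 5)
```

Hashing the request id, rather than drawing a random number, keeps a user on one version across requests. This makes per-version metrics easier to compare.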

Learnings

- Batch, Online & Streaming Inference: Understand the trade-offs between batch predictions, real-time scoring, and continuous stream processing.
- Embedded Model Deployment: Deploy models directly inside web apps, mobile devices, and edge environments using tools like Gradio, Core ML, and ONNX.
- Model-as-a-Service (MaaS): Serve models via APIs and pipelines using MLServer, including multi-step orchestration for audio transcription, translation, and summarization.
- Pipeline Deployment with Seldon Core 2: Chain multiple models together in Kubernetes using simple YAML specs, with support for advanced routing and configuration.
- A/B Testing & Canary Deployments: Dynamically split traffic between model versions, with built-in statistical testing and safe rollback options.
- Shadow Deployments & Monitoring: Evaluate models on live traffic without user impact, capturing predictions for offline comparison and debugging.
- Multi-Armed Bandits: Automatically route more traffic to better-performing models over time using reward-based learning.
- LLM Deployment at Scale: Serve large language models with backends such as vLLM, DeepSpeed, and Triton, and integrate them with prompt management and GPU optimization.
- Use Cases Across Environments: Explore hybrid, cloud, and on-prem deployments across finance, healthcare, energy, and edge AI.
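The "statistical testing" behind A/B and canary decisions can take many forms. One common choice for binary outcomes, such as click or no click, is the two-proportion z-test. The Python sketch below is an illustration under that assumption, not the test Seldon uses. `two_proportion_z_test`, `canary_decision`, and the 0.05 threshold are hypothetical choices.

```python
import math
from typing import Tuple


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: versions A and B share one success rate."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Degenerate case: every outcome is a success (or every one a failure) in both groups.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return z, math.erfc(abs(z) / math.sqrt(2))


def canary_decision(stable_successes: int, stable_n: int,
                    canary_successes: int, canary_n: int,
                    alpha: float = 0.05) -> str:
    """Hypothetical policy: promote or roll back only when the difference is significant."""
    z, p_value = two_proportion_z_test(stable_successes, stable_n, canary_successes, canary_n)
    if p_value >= alpha:
        return "keep-collecting"
    return "promote" if z > 0 else "rollback"


print(canary_decision(100, 1000, 150, 1000))
```

With 10% versus 15% success over 1,000 requests each, the difference is significant and the canary is promoted. With 10% versus 10.2%, the sketch keeps collecting data instead of acting on noise.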
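The multi-armed bandit item describes shifting traffic toward better-performing models as rewards arrive. Epsilon-greedy is one of the simplest policies with that behavior. The Python sketch below uses it purely for illustration and does not claim it is the algorithm Seldon ships. `EpsilonGreedyRouter`, the arm names, and the simulated reward rates are hypothetical.

```python
import random
from typing import Dict, List, Optional


class EpsilonGreedyRouter:
    """Hypothetical epsilon-greedy bandit that picks which model version serves a request."""

    def __init__(self, arms: List[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.pulls: Dict[str, int] = {a: 0 for a in self.arms}
        self.value: Dict[str, float] = {a: 0.0 for a in self.arms}  # running mean reward per arm

    def choose(self) -> str:
        # Try every arm once, then explore with probability epsilon, else exploit the best mean.
        untried = [a for a in self.arms if self.pulls[a] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda a: self.value[a])

    def update(self, arm: str, reward: float) -> None:
        # Incremental mean: Q <- Q + (r - Q) / n
        self.pulls[arm] += 1
        self.value[arm] += (reward - self.value[arm]) / self.pulls[arm]


# Simulated feedback loop with made-up success rates for two model versions.
true_rates = {"model-a": 0.05, "model-b": 0.08}
bandit = EpsilonGreedyRouter(list(true_rates), epsilon=0.1, seed=0)
env = random.Random(1)
for _ in range(5000):
    arm = bandit.choose()
    bandit.update(arm, 1.0 if env.random() < true_rates[arm] else 0.0)
print(bandit.pulls)
```

Unlike a fixed A/B split, the bandit keeps only a small exploration share (`epsilon`) on the weaker arm. The trade-off is noisier estimates for arms it rarely serves.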
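The Model-as-a-Service and pipeline items describe multi-step chains such as transcription, then translation, then summarization. In Seldon Core 2 such a chain is declared in a YAML spec. The Python sketch below only illustrates the data flow of a linear chain. `run_pipeline`, `transcribe`, `translate`, and `summarize` are hypothetical toy stand-ins, not real models or Seldon APIs.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: List[Step], payload: Any) -> Dict[str, Any]:
    """Run steps in order, feeding each output into the next; keep every step's output."""
    outputs: Dict[str, Any] = {}
    current = payload
    for name, fn in steps:
        current = fn(current)
        outputs[name] = current
    return outputs


def transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # toy stand-in: pretend the "audio" is already text


def translate(text: str) -> str:
    glossary = {"hola": "hello", "mundo": "world"}  # toy word-by-word "translation"
    return " ".join(glossary.get(word, word) for word in text.split())


def summarize(text: str) -> str:
    return " ".join(text.split()[:3])  # toy "summary": the first three words


result = run_pipeline(
    [("transcribe", transcribe), ("translate", translate), ("summarize", summarize)],
    b"hola mundo",
)
print(result["summarize"])
```

Keeping each step's output, not just the final one, makes it easier to debug which stage of a chain went wrong.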

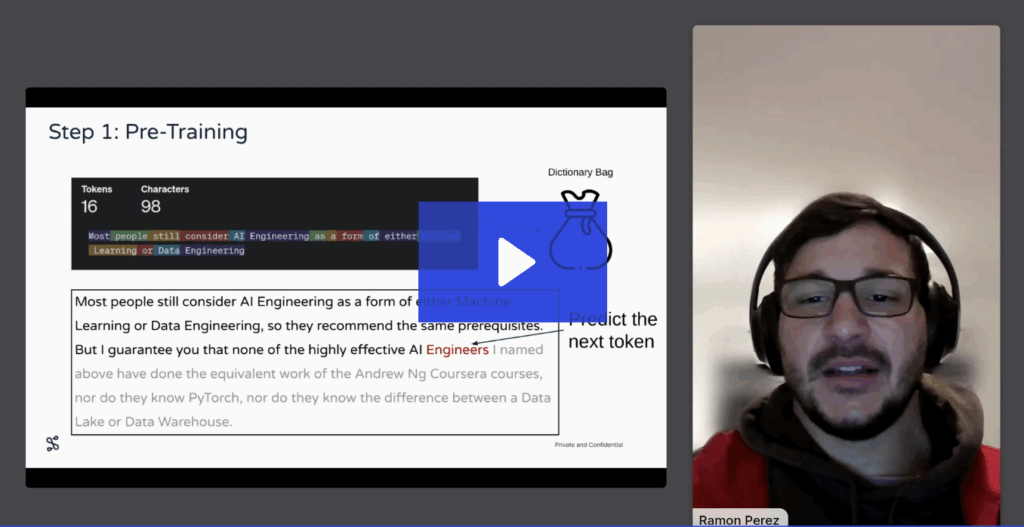

Ramon Perez

Ramon Perez has spent the past nine years working across the data spectrum, from data analysis and dashboard building to developing data platforms and creating numerous machine learning models. Beyond his technical work, Ramon is passionate about sharing knowledge and has taught and presented at universities, companies, conferences, and Meetup events. When he’s not immersed in data, you’ll likely find him mountain biking, exploring the outdoors, or discovering new coffee spots and eateries.

Complex, real-time use cases are what we do best

Talk with an expert to explore how Seldon can support more streamlined deployments for complex, real-time projects like fraud detection, personalization, and much more.
