Optimize Models

Maximize Outcomes+

The Model Performance Metrics (MPM) Module, built on top of Core+, extends your data science evaluation capabilities with model quality insights that help you optimize production classification and regression models.

Book a Demo

Discuss your ML use cases and challenges with an expert for a tailored deep dive into how Seldon can support your goals.

FREE DOWNLOAD

MPM Module Product Overview

A comprehensive overview of Seldon’s Model Performance Metrics (MPM) Module, covering its architecture, key features, and use cases.

Get to know Seldon's MPM Module

Give your team real-time visibility into model accuracy and reliability, helping them detect hidden degradation, maintain compliance, and build stakeholder trust in production AI.

Adapt Quickly

Stay ahead of business and regulatory demands by monitoring both classification and regression models as environments and data patterns change.

Consistency & Trust

Unify how performance is measured and tracked across models, use cases, and deployments.

Root-Cause Analysis

Perform root-cause analysis in Jupyter notebooks and reporting dashboards that provide clear insights from model quality data.

Reduce Risk

Reduce model degradation risk and business impact by proactively detecting and responding to dips in model quality.

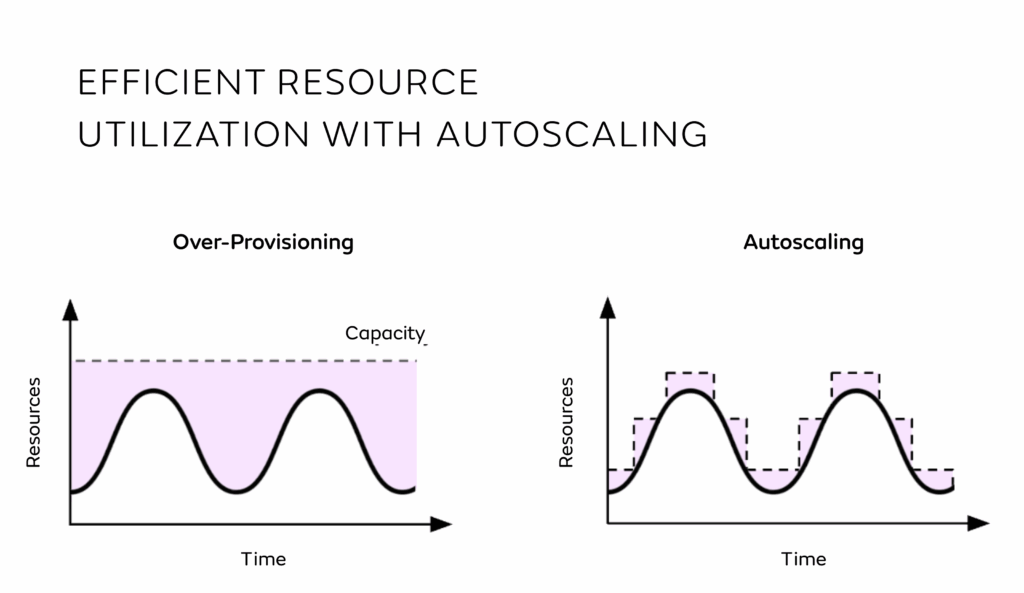

Deploy and Scale Real-Time Applications

Flexible enough to fit any system or requirement, with built-in standardization, observability, and cost optimization, Seldon ensures real-time predictions and efficient operations across any environment.

MPM Features That Fuel Success+

Simplify complexity. Scale with confidence.

Comprehensive Metric Coverage

A complete evaluation toolkit for consistently measuring classification (including multi-class) and regression models.
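To make these metric families concrete, here is a minimal, framework-free Python sketch of a few standard metrics: accuracy and macro-averaged F1 for classification (binary or multi-class), and MAE and RMSE for regression. The function names are hypothetical, and this is not MPM's implementation or its exact list of metrics; it only illustrates what such metrics compute from paired predictions and ground truth.

```python
import math

def _check(y_true, y_pred):
    # Both sequences must be non-empty and aligned element by element
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")

def accuracy(y_true, y_pred):
    """Share of predictions that exactly match the ground-truth label."""
    _check(y_true, y_pred)
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores; works for binary and multi-class labels."""
    _check(y_true, y_pred)
    f1_scores = []
    for label in set(y_true) | set(y_pred):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)

def mae(y_true, y_pred):
    """Mean absolute error for regression outputs."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large misses more heavily than MAE."""
    _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))
```

Macro-averaging gives every class equal weight, so a model that ignores a rare class is penalized even when its overall accuracy looks healthy.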

Feedback Storage & Linking

Link feedback to predictions for auditable insights and use APIs to slice metrics by model, pipeline, or time.
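Linking feedback to predictions generally means joining each ground-truth label to the prediction it refers to through a shared identifier, then grouping the joined records. The sketch below assumes a hypothetical `request_id` key and hypothetical `Prediction` and `Feedback` records; it is not Seldon's API, only an illustration of the join-then-slice idea behind slicing metrics by model or pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Prediction:
    request_id: str   # hypothetical identifier shared with the feedback record
    model: str
    pipeline: str
    timestamp: float  # seconds since epoch
    predicted: str

@dataclass
class Feedback:
    request_id: str
    actual: str       # ground-truth outcome that arrives later

def link_feedback(predictions, feedback):
    """Join feedback to predictions on request_id; feedback with no matching prediction is dropped."""
    by_id = {p.request_id: p for p in predictions}
    return [(by_id[f.request_id], f.actual) for f in feedback if f.request_id in by_id]

def accuracy_by(linked, key):
    """Group linked records by a Prediction attribute (e.g. "model" or "pipeline")
    and return the accuracy of each group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for pred, actual in linked:
        group = getattr(pred, key)
        totals[group] += 1
        hits[group] += pred.predicted == actual  # True counts as 1
    return {group: hits[group] / totals[group] for group in totals}
```

Unmatched feedback is silently discarded here for brevity; a production system would more likely retain it and surface the mismatch for auditing.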

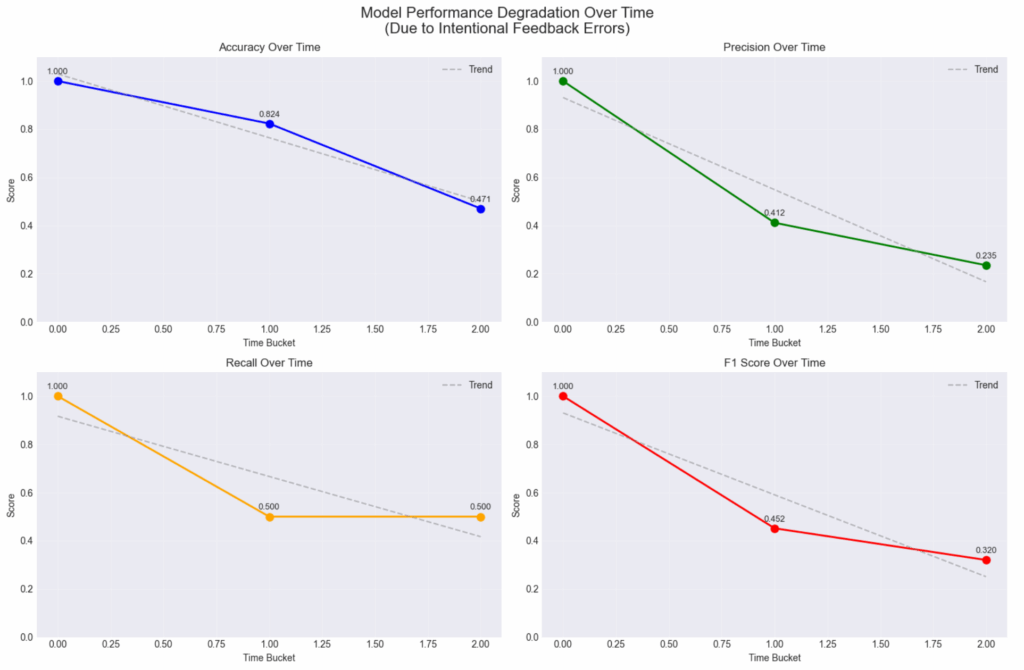

Time-Based Trend Analysis

Track performance shifts in real time with rolling windows, from seconds to hours, ideal for spotting spikes and anomalies.
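A rolling time window can be maintained incrementally: keep recent events in arrival order, evict those that have aged out of the window, and update a running count. The sketch below computes rolling accuracy under the assumption that events arrive with non-decreasing timestamps in seconds; `RollingAccuracy` is a hypothetical name and not part of MPM.

```python
from collections import deque

class RollingAccuracy:
    """Accuracy over the most recent `window_seconds` of linked predictions.
    Assumes events are added in non-decreasing timestamp order."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, is_correct) pairs, oldest first
        self._correct = 0

    def add(self, timestamp, is_correct):
        self._events.append((timestamp, is_correct))
        self._correct += int(is_correct)
        self._evict(timestamp)

    def _evict(self, now):
        # Drop events at or before the window's start (now - window_seconds)
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, old_correct = self._events.popleft()
            self._correct -= int(old_correct)

    def value(self):
        """Accuracy within the window ending at the latest event, or None if empty."""
        return self._correct / len(self._events) if self._events else None
```

Each event is appended and evicted at most once, so updates cost amortized constant time regardless of window length, which is what makes very short windows (seconds) and long ones (hours) equally cheap to track.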

Model Quality Dashboards

Jumpstart with ready-made Grafana and Jupyter dashboards, or build your own with full API access to every metric.

Deploy. Monitor. Improve+

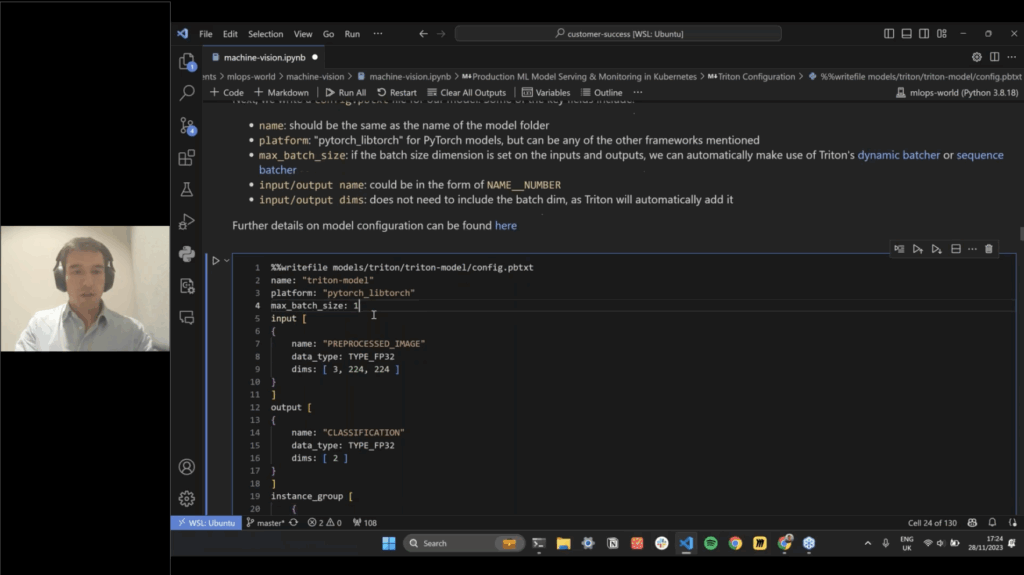

The MPM Module leverages the microservice-based architecture of Core 2, tightly coupling live inference, feedback ingestion, and real-time insight delivery without affecting model quality or disrupting deployment workflows.

Control Plane Services

Manage metric subscriptions, schema configurations, and pipeline associations so that you can declaratively define what is monitored and how it is analyzed.

Data Plane Services

Expose real-time metrics through REST APIs, with support for high-throughput querying, time-based bucketing, and namespace- or model-level filtering.

Get to know Core+

Our business is your success. Stay ahead with accelerator programs, certifications, and hands-on support from our in-house experts to maximize innovation.

Accelerator Programs

Tailored recommendations to optimize, improve, and scale through bespoke, data-driven suggestions.

Hands-on Support

A dedicated Success Manager who can support your team from integration to innovation.

SLAs

Get timely answers with clear SLAs, customer portals, and more.

Seldon IQ

Customized enablement, workshops, and certifications.

Proven Use Cases

A few ways organizations use Seldon’s Model Performance Metrics (MPM) to validate models, ensure compliance, and monitor business-critical predictions in production.

Deployed models often face changing real-world data, making it hard to know if predictions remain accurate and trustworthy. Seldon’s Model Performance Metrics (MPM) Module solves this by continuously comparing live predictions with actual outcomes. This real-time validation quickly flags drift, bias, or degradation, giving teams confidence that models stay reliable and compliant.

Build confidence in production models

Detect underperforming or biased models early

Demonstrate compliance with audit-ready monitoring

Take proactive action to reduce risk and improve outcomes

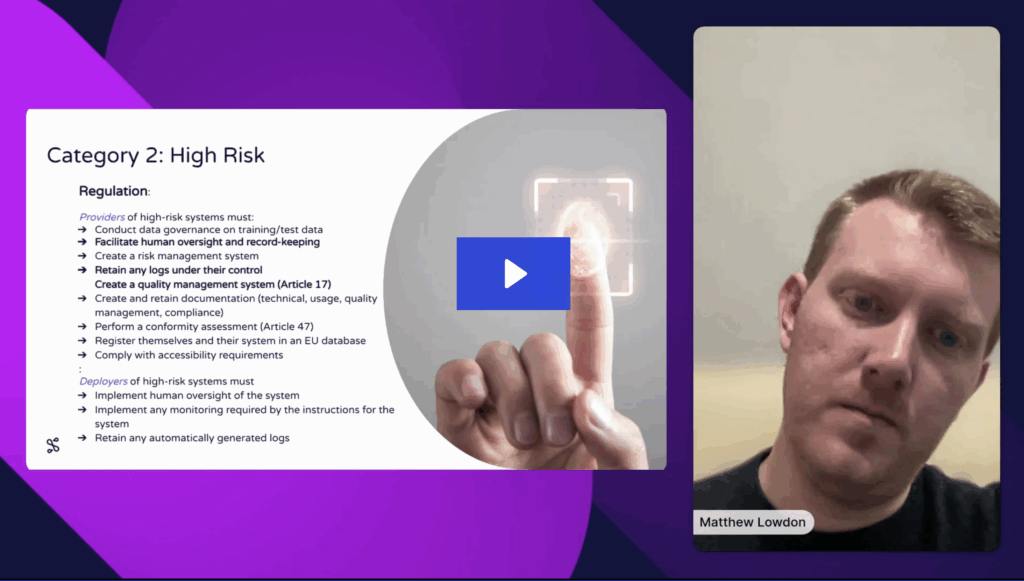

Regulated industries need transparency and accountability for AI decisions, but most ML systems lack built-in audit trails. Seldon’s Model Performance Metrics (MPM) Module solves this by capturing decision data, explanations, and outcomes in real time. This ensures every prediction is traceable, auditable, and ready for regulatory review.

Maintain audit-ready records for regulated AI decisions

Provide full traceability and accountability across models

Demonstrate compliance with clear, transparent monitoring

Reduce risk of regulatory fines or reputational damage

Regression models drive key business decisions, from predicting risks to scoring quality. But even small changes in their accuracy can have major downstream impacts. With Seldon’s Model Performance Metrics (MPM) Module, organizations can continuously monitor regression outputs, detect anomalies early, and ensure critical predictions remain stable and reliable in production.

Identify unexpected changes in regression model performance

Detect anomalies before they impact business operations

Maintain trust in business-critical predictions

Reduce downtime and operational risk through proactive monitoring
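One simple way to flag an unexpected change in regression performance is to compare each new batch's error against a stable baseline period. The sketch below assumes per-batch mean absolute error (MAE) values have already been computed and uses a z-score threshold of 3 as an illustrative default; it is a generic statistical check, not the detection method MPM uses, and the function name is hypothetical.

```python
import statistics

def flag_anomalous_batches(baseline_maes, live_maes, z_threshold=3.0):
    """Return indices of live batches whose MAE sits more than `z_threshold`
    standard deviations above the baseline mean (only degradations are flagged)."""
    mean = statistics.mean(baseline_maes)
    # statistics.stdev is the sample standard deviation and needs at least two values
    std = statistics.stdev(baseline_maes)
    if std == 0:
        # Perfectly flat baseline: flag any batch that is worse than it
        return [i for i, m in enumerate(live_maes) if m > mean]
    return [i for i, m in enumerate(live_maes) if (m - mean) / std > z_threshold]

# Example: MAE per batch during a stable reference period vs. recent batches
baseline = [1.0, 1.2, 0.8, 1.0]
live = [1.1, 0.9, 2.0]
print(flag_anomalous_batches(baseline, live))  # → [2]
```

Only upward deviations are flagged because a drop in error is an improvement; a two-sided check would also catch suspicious sudden improvements, such as leaked labels.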

Turn Complexity into Clarity

Let our team show you how the Model Performance Metrics (MPM) Module works with the Seldon ecosystem, making it simple to innovate while maintaining compliance, reducing risk, and building trust in your AI systems.

DEMO

FREE DOWNLOAD

The Essential Guide to ML System Monitoring and Drift Detection

Learn when and how to monitor ML systems and detect data drift, along with overall best practices and insights from Seldon customers on future challenges.

CONTINUED LEARNING

Dive deeper into topics like monitoring, deployment, AI, and more.

Become a GenAI expert with our recent LLMOps demos, blogs, and on-demand webinars.

Stay Ahead in MLOps with Our Monthly Newsletter!

Join over 25,000 MLOps professionals with Seldon’s MLOps Monthly Newsletter. Opt out anytime with just one click.