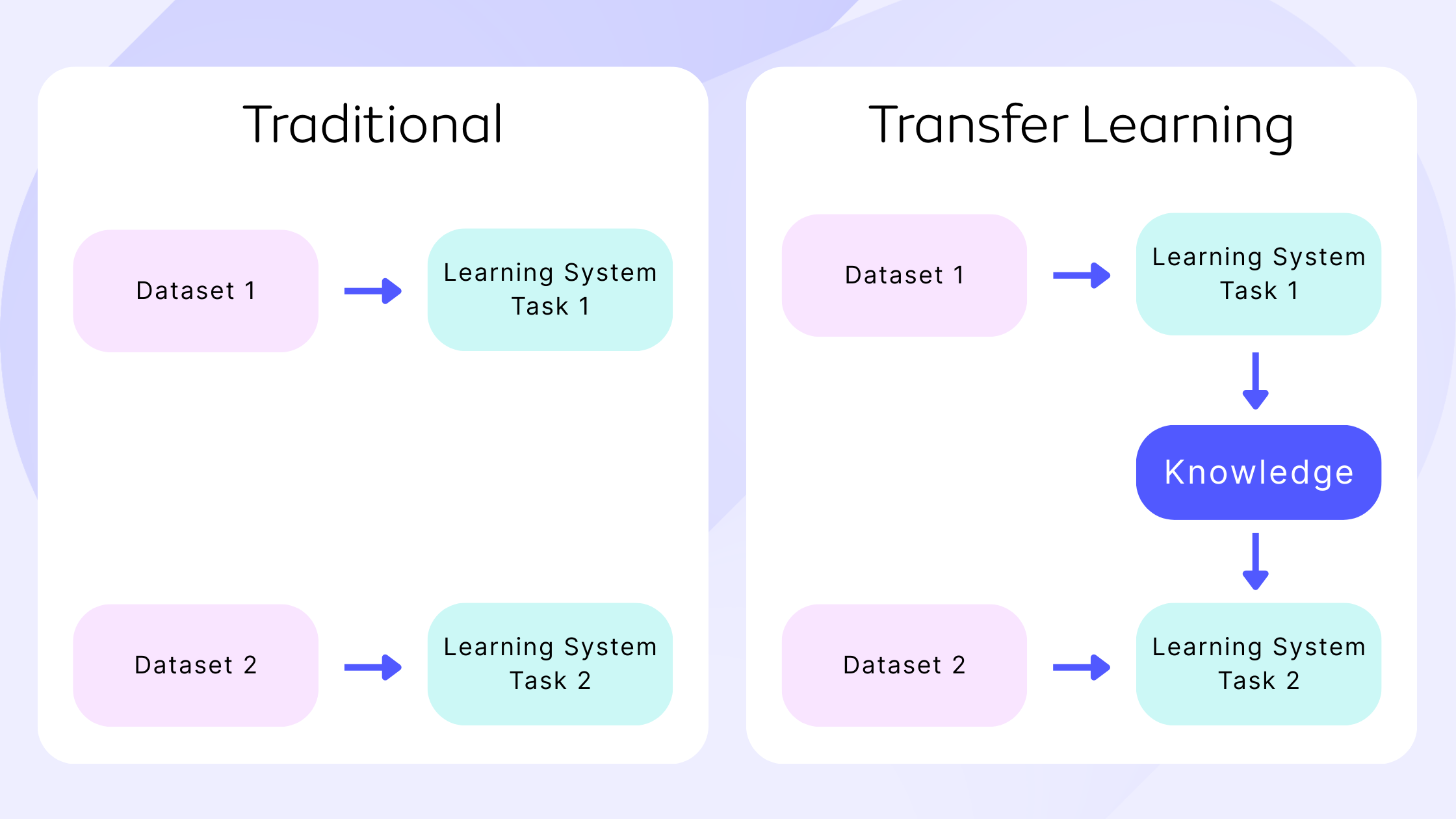

Transfer learning for machine learning is when elements of a pre-trained model are reused in a new machine learning model. If the two models are developed to perform similar tasks, then generalized knowledge can be shared between them. This approach to machine learning development reduces the resources and amount of labelled data required to train new models. It is becoming an important part of the evolution of machine learning and is increasingly used as a technique within the development process.

Machine learning is becoming an integral part of the modern world. Machine learning algorithms are being used to complete complex tasks in a range of industries. Examples include refining marketing campaigns for a better return on investment, improving network efficiency, and driving the evolution of speech recognition software. Transfer learning will play an important role in the continued development of these models.

There are many types of machine learning, but one of the most popular is supervised machine learning. This type of machine learning uses labelled training data to train models. It takes expertise to label datasets correctly, and training models is often resource-intensive and time-consuming. Transfer learning helps address these issues, and for this reason is becoming an important technique in the machine learning landscape.

This guide explores transfer learning for machine learning, including what it is, how it works, and why it is used.

What is transfer learning?

Transfer learning for machine learning is when existing models are reused to solve a new challenge or problem. Transfer learning is not a distinct type of machine learning algorithm; instead, it is a technique or method used whilst training models. The knowledge developed from previous training is recycled to help perform a new task. The new task will be related in some way to the previously trained task; for example, both might involve categorizing objects in a specific file type. The original model usually needs to generalize well in order to adapt to new, unseen data.

Transfer learning means that training doesn’t need to be restarted from scratch for every new task. Training new machine learning models can be resource-intensive, so transfer learning saves both resources and time. Accurately labelling large datasets also takes a huge amount of time, and much of the data that organizations hold is unlabelled, especially at the scale needed to train a machine learning algorithm. With transfer learning, a model can be trained on an available labelled dataset, then applied to a similar task that may involve unlabelled data.

What is transfer learning used for?

Transfer learning for machine learning is often used when training a system to solve a new task would take a huge amount of resources. The process takes relevant parts of an existing machine learning model and applies them to a new but similar problem. A key part of transfer learning is generalization: only knowledge that another model can use in different scenarios or conditions is transferred. Instead of being rigidly tied to a training dataset, models used in transfer learning are more generalized, so they can be used in changing conditions and with different datasets.

An example is the use of transfer learning in image categorization. A machine learning model can be trained with labelled data to identify and categorize the subjects of images. Through transfer learning, the model can then be adapted and reused to identify a different specific subject within a set of images. The general elements of the model stay the same, saving resources; for example, the parts of the model that identify the edges of objects in an image. Transferring this knowledge avoids training a new model from scratch to achieve the same result.

Transfer learning is generally used:

- To avoid spending the time and resources needed to train multiple machine learning models from scratch for similar tasks.

- As an efficiency saving in areas of machine learning that demand large amounts of resources, such as image categorization or natural language processing.

- To compensate for a lack of labelled training data within an organization, by using pre-trained models.

How does transfer learning work?

Transfer learning means taking the relevant parts of a pre-trained machine learning model and applying them to a new but similar problem. This will usually be the core of the model, with new components added to solve a specific task. Programmers need to identify which parts of the model are relevant to the new task and which parts need to be retrained. For example, a new model may keep the processes that allow the machine to identify objects or data, but retrain the model to identify a different specific object.

A machine learning model which identifies a certain subject within a set of images is a prime candidate for transfer learning. The bulk of the model which deals with how to recognize different subjects can be kept. The part of the algorithm which highlights a specific subject to categorize is the element that will be retrained. In this case, there’s no need to rebuild and retrain a machine learning algorithm from scratch.
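To make the split between the kept and retrained parts concrete, here is a minimal pure-Python sketch, assuming a tiny two-unit feature extractor stands in for the pre-trained bulk of the model. The values in `PRETRAINED_WEIGHTS` are made up for illustration rather than taken from any real training run, and `extract_features`, `train_head` and `predict` are hypothetical names. Only the new logistic-regression head is updated; the base layer is frozen.

```python
import math

# Hypothetical "pre-trained" weights. In practice these would come from a model
# trained on a large labelled dataset; here they are illustrative values.
# They are never modified below, i.e. the base layer is frozen.
PRETRAINED_WEIGHTS = [[1.0, -1.0, 0.0],
                      [0.0, 1.0, -1.0]]


def extract_features(x):
    """Frozen base: a linear layer followed by ReLU, reused unchanged."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(samples, labels, lr=0.5, epochs=500):
    """Train only a new logistic-regression head on the frozen features."""
    features = [extract_features(x) for x in samples]  # base runs once
    weights = [0.0] * len(PRETRAINED_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            p = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)
            error = p - y  # gradient of the log-loss w.r.t. the logit
            weights = [w - lr * error * fi for w, fi in zip(weights, f)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    """Full model: frozen base followed by the newly trained head."""
    f = extract_features(x)
    return int(sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias) >= 0.5)


# A small labelled dataset for the new task.
samples = [[3, 0, 0], [2, 0, 1], [0, 3, 0], [0, 2, 0]]
labels = [1, 1, 0, 0]
head_w, head_b = train_head(samples, labels)
print([predict(head_w, head_b, x) for x in samples])  # [1, 1, 0, 0]
```

Because the frozen features are computed once and reused on every epoch, only the small head is trained, which is where the saving in time and labelled data comes from.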

In supervised machine learning, models are trained to complete specific tasks from labelled data during the development process. Inputs and desired outputs are clearly mapped and fed to the algorithm. The model can then apply the learned trends and patterns to new data. Models developed this way are highly accurate when solving tasks in the same environment as their training data, but become much less accurate if the conditions or environment in real-world use drift beyond the training data. A new model based on new training data may then be required, even if the tasks are similar.
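The accuracy drop can be illustrated with a deliberately tiny example. The sketch below, using made-up data and hypothetical function names, fits a one-dimensional threshold classifier in one environment and then evaluates it on the same task after every input has been shifted by a constant offset, a simple stand-in for a change in real-world conditions.

```python
def fit_threshold(xs, ys):
    """Supervised 1-D classifier: threshold halfway between the two class means."""
    class0 = [x for x, y in zip(xs, ys) if y == 0]
    class1 = [x for x, y in zip(xs, ys) if y == 1]
    return (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2


def accuracy(threshold, xs, ys):
    """Fraction of points where (x >= threshold) matches the label."""
    correct = sum(int(x >= threshold) == y for x, y in zip(xs, ys))
    return correct / len(xs)


# Labelled training data from the original environment.
train_x = [0.8, 1.0, 1.2, 2.8, 3.0, 3.2]
train_y = [0, 0, 0, 1, 1, 1]
threshold = fit_threshold(train_x, train_y)  # roughly 2.0

# Hypothetical new environment: same task, but every reading is offset by +2.0
# (for example, a differently calibrated sensor).
shifted_x = [x + 2.0 for x in train_x]

print(accuracy(threshold, train_x, train_y))    # 1.0
print(accuracy(threshold, shifted_x, train_y))  # 0.5
```

Nothing about the task has changed, only the distribution of the inputs, yet the learned threshold no longer separates the classes.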

Transfer learning is a technique to help solve this problem. As a concept, it works by transferring as much knowledge as possible from an existing model to a new model designed for a similar task. For example, the more general aspects of a model, which make up the main processes for completing a task, can be transferred. This could be the process behind how objects or images are identified or categorized. Extra layers of more specific knowledge can then be added to the new model, allowing it to perform its task in new environments.

Benefits of transfer learning for machine learning

Transfer learning brings a range of benefits to the development of machine learning models. The main benefits include saving resources and improving efficiency when training new models. It can also help with training models when labelled data for the new task is scarce, as the bulk of the model will already be pre-trained.

The main benefits of transfer learning for machine learning include:

- Removing the need for a large set of labelled training data for every new model.

- Improving the efficiency of machine learning development and deployment for multiple models.

- Enabling a more generalized approach to machine learning problem solving, leveraging different algorithms to solve new challenges.

- Allowing models to be trained within simulations instead of real-world environments.

Saving on training data

A large amount of data is usually required to accurately train a machine learning algorithm, and labelled training data takes time, effort and expertise to create. Transfer learning cuts down on the training data required for new machine learning models, as most of the model has already been trained.

In many cases, large sets of labelled data are unavailable to organizations. Transfer learning means models can be trained on available labelled datasets, then applied to similar data that’s unlabelled.

Efficiently train multiple models

Machine learning models designed to complete complex tasks can take a long time to properly train. Transfer learning means organizations don’t have to start from scratch each time a similar model is required. The resources and time put into training a machine learning algorithm can be shared across different models. The whole training process is made more efficient by reusing elements of an algorithm and transferring the knowledge already held by a model.

Leverage knowledge to solve new challenges

Supervised machine learning is one of the most popular types of machine learning today. The approach creates highly accurate algorithms that are trained to complete specific tasks using labelled training data. However, once deployed, performance may suffer if the data or environment drifts away from the training data. Transfer learning means knowledge can be leveraged from existing models instead of starting from scratch each time.

Transfer learning helps developers take a blended approach, drawing on different models to fine-tune a solution to a specific problem. Sharing knowledge between two different models can result in a more accurate and powerful model. The approach also allows models to be built iteratively.

Simulated training to prepare for real-world tasks

Transfer learning is a key element of any machine learning process that includes simulated training. For models that need to be trained for real-world environments and scenarios, digital simulations are a less expensive and less time-consuming option. Simulations can be created to mirror real-life environments and actions, and models can be trained to interact with objects in the simulated environment.

Simulated environments are increasingly used for reinforcement learning models. In this case, models are trained to perform tasks in different scenarios, interacting with objects and environments. For example, simulation is already a key step in the development of self-driving systems for cars, because initial training of a model in a real-world environment could prove dangerous and time-consuming. The more generalized parts of the model can be built using simulations before being transferred to a model for real-world training.

Examples of transfer learning for machine learning

Although it is an emerging technique, transfer learning is already being used in a range of fields within machine learning. Whether strengthening natural language processing or computer vision, transfer learning already has a range of real-world uses.

Examples of the areas of machine learning that utilize transfer learning include:

- Natural language processing

- Computer vision

- Neural networks

Transfer learning in natural language processing

Natural language processing is the ability of a system to understand and analyze human language, whether from audio or text. It’s an important part of improving how humans and systems interact. Natural language processing is integral to everyday services like voice assistants, speech recognition software, automated captions, translation, and language contextualization tools.

Transfer learning is used in a range of ways to strengthen machine learning models that deal with natural language processing. Examples include simultaneously training a model to detect different elements of language, or embedding pre-trained layers which understand specific dialects or vocabulary.

Transfer learning can also be used to adapt models across different languages. Aspects of models trained and refined based on the English language can be adapted for similar languages or tasks. Digitized English language resources are very common, so models can be trained on a large dataset before elements are transferred to a model for a new language.
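One simple starting point when adapting a model to a new language is to reuse the pre-trained embedding vectors for any tokens the two vocabularies share and to initialize the rest from scratch. The sketch below assumes a toy word-level vocabulary with three-dimensional vectors whose values are invented for illustration; `transfer_embeddings` is a hypothetical helper, and real systems more often share subword vocabularies than whole words.

```python
import random


def transfer_embeddings(source_embeddings, target_vocab, dim, seed=0):
    """Build embeddings for a new vocabulary, reusing pre-trained vectors where possible.

    Tokens present in the source vocabulary get a copy of their pre-trained
    vector; unseen tokens get small random vectors that would then be learned
    during training on the new language.
    """
    rng = random.Random(seed)
    target = {}
    reused = []
    for token in target_vocab:
        if token in source_embeddings:
            target[token] = list(source_embeddings[token])  # copy, don't alias
            reused.append(token)
        else:
            target[token] = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
    return target, reused


# Hypothetical pre-trained English vectors (values invented for illustration).
english = {
    "hotel": [0.2, 0.7, -0.1],
    "taxi": [0.5, -0.3, 0.9],
    "the": [0.0, 0.1, 0.0],
}
spanish_vocab = ["hotel", "taxi", "el", "playa"]
spanish, reused = transfer_embeddings(english, spanish_vocab, dim=3)
print(reused)  # ['hotel', 'taxi']
```

Matching identical spellings is only a rough heuristic: a word written the same way in two languages does not always carry the same meaning, so the reused vectors are a starting point to be refined by further training.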

Transfer learning in computer vision

Computer vision is the ability of systems to understand and take meaning from visual formats such as videos or images. Machine learning algorithms are trained on huge collections of images to be able to recognize and categorize image subjects. Transfer learning in this case takes the reusable aspects of a computer vision algorithm and applies them to a new model.

Transfer learning can take the accurate models produced from large training datasets and help apply them to smaller sets of images. This includes transferring the more general aspects of the model, such as the process for identifying the edges of objects in images. The more specific layer of the model, which deals with identifying types of objects or shapes, can then be trained. The model’s parameters will still need to be refined and optimized, but the core functionality of the model will have been set through transfer learning.
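As a rough illustration of the split between general and specific layers, the sketch below uses a fixed Sobel-style kernel as the frozen, general edge detector and fits only a small task-specific threshold on top. The kernel is a standard hand-designed edge filter standing in for learned early-layer weights (an assumption: real pre-trained convolutional layers learn their own filters), and `edge_strength` and `fit_head` are hypothetical names.

```python
# Hand-designed Sobel kernel standing in for learned early-layer filters.
# It responds to vertical edges (changes in brightness from left to right).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]


def convolve2d(image, kernel):
    """'Valid' 2-D cross-correlation, as computed by typical conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def edge_strength(image):
    """Frozen, general feature: strongest vertical-edge response in the image."""
    return max(abs(v) for row in convolve2d(image, SOBEL_X) for v in row)


def fit_head(images, labels):
    """Task-specific head: a threshold halfway between each class's mean feature."""
    scores = [edge_strength(img) for img in images]
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


edge_image = [[0, 0, 9, 9] for _ in range(4)]  # dark left half, bright right half
flat_image = [[5, 5, 5, 5] for _ in range(4)]  # no edges
threshold = fit_head([edge_image, flat_image], [1, 0])
print(edge_strength(edge_image), edge_strength(flat_image), threshold)  # 36 0 18.0
```

The edge detector is shared by any task built on top of it; only `fit_head` has to be rerun with labelled images for each new task.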

Transfer learning in neural networks

Artificial neural networks are an important aspect of deep learning, an area of machine learning loosely inspired by the structure and function of the human brain. Training neural networks takes a huge amount of resources because of the complexity of the models. Transfer learning is used to make the process more efficient and lower the resource demand.

Any transferable knowledge or features can be moved between networks to streamline the development of new models. Applying knowledge across different tasks or environments is an important part of building such a network. Transferred knowledge will usually be limited to general processes or tasks that stay viable in different environments.
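Moving knowledge between networks often amounts to copying the parameters of shared layers and leaving task-specific layers to be trained afresh. The framework-agnostic sketch below represents each network’s parameters as a dictionary of nested lists; the layer names such as `features.weight` and the helpers `shape` and `transfer_layers` are hypothetical. A parameter is copied only when both its name and shape match, so a classification head sized for a different number of classes is left untouched.

```python
import copy


def shape(tensor):
    """Shape of a nested-list tensor, e.g. [[1, 2, 3], [4, 5, 6]] -> (2, 3)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(dims)


def transfer_layers(source, target):
    """Copy parameters whose name and shape match; keep the target's values otherwise."""
    result = copy.deepcopy(target)
    transferred = []
    for name, value in source.items():
        if name in target and shape(value) == shape(target[name]):
            result[name] = copy.deepcopy(value)
            transferred.append(name)
    return result, transferred


# Hypothetical pre-trained network: a shared feature layer plus a 3-class head.
source = {
    "features.weight": [[0.5, -0.2], [0.1, 0.4]],
    "features.bias": [0.0, 0.1],
    "head.weight": [[0.3, 0.3], [-0.3, 0.3], [0.2, -0.1]],
}
# New network for a 2-class task: same feature layer, differently sized head.
target = {
    "features.weight": [[0.0, 0.0], [0.0, 0.0]],
    "features.bias": [0.0, 0.0],
    "head.weight": [[0.01, -0.01], [0.02, 0.0]],
}
new_params, transferred = transfer_layers(source, target)
print(transferred)  # ['features.weight', 'features.bias']
```

The new head is then trained on the new task’s data, typically with the transferred layers either frozen or updated with a small learning rate.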

The future of transfer learning

The future of machine learning relies on widespread access to powerful models by different organizations and businesses. To transform businesses and processes, machine learning needs to be accessible and adaptable to each organization’s distinct local needs and requirements. Only a minority of organizations will actually have the expertise or the resources to label data and train a model.

The main difficulty is in obtaining large volumes of labelled data for supervised machine learning. Labelling data can be extremely labour-intensive, especially across large datasets. The need for large labelled datasets is a barrier to the widespread development of the most powerful models.

Algorithms are likely to be developed centrally by organizations with access to, and the resources to label, the huge volumes of data required. But when these models are deployed by other organizations, performance can suffer, as each environment may be slightly different to the one the model was trained for. In practice, even the most accurate models may see reduced performance when deployed. This may prove to be a barrier to machine learning products and solutions moving into mainstream use.

Transfer learning will play a key role in solving this issue. Techniques in transfer learning will mean powerful machine learning models developed at scale can be adapted for specific tasks and environments. Transfer learning will be a key driver for the distribution of machine learning models across new areas and industries.

Real-Time Deployment at Scale, Managed Your Way

Seldon moves machine learning from POC to production to scale, reducing time-to-value so models can get to work up to 85% quicker. In this rapidly changing environment, Seldon can give you the edge you need to supercharge your performance.

With Seldon, your business can efficiently manage and monitor machine learning, minimize risk, and understand how machine learning models impact decisions and business processes. This means you know your team has done its due diligence in creating a more equitable system while boosting performance.
