Imagine this scenario:

Your company has invested significantly in AI models designed to detect fraud, improve customer interactions, or anticipate maintenance needs. Initially, the excitement is palpable, with promises of transformative business outcomes. But soon enough, things slow down. Your models are making decisions, but why those decisions are made, and whether they’re truly optimal, remains a mystery. Welcome to the black-box reality of model-centric MLOps.

“Improving the data is not a preprocessing step that you do once. It’s part of the iterative process of model deployment.”

Andrew Ng, founder of DeepLearning.AI

It all sounds like common sense. But what does this look like in practice, and how does shifting to a data-centric approach change the game?

Why the Model-Centric Approach Is Holding You Back

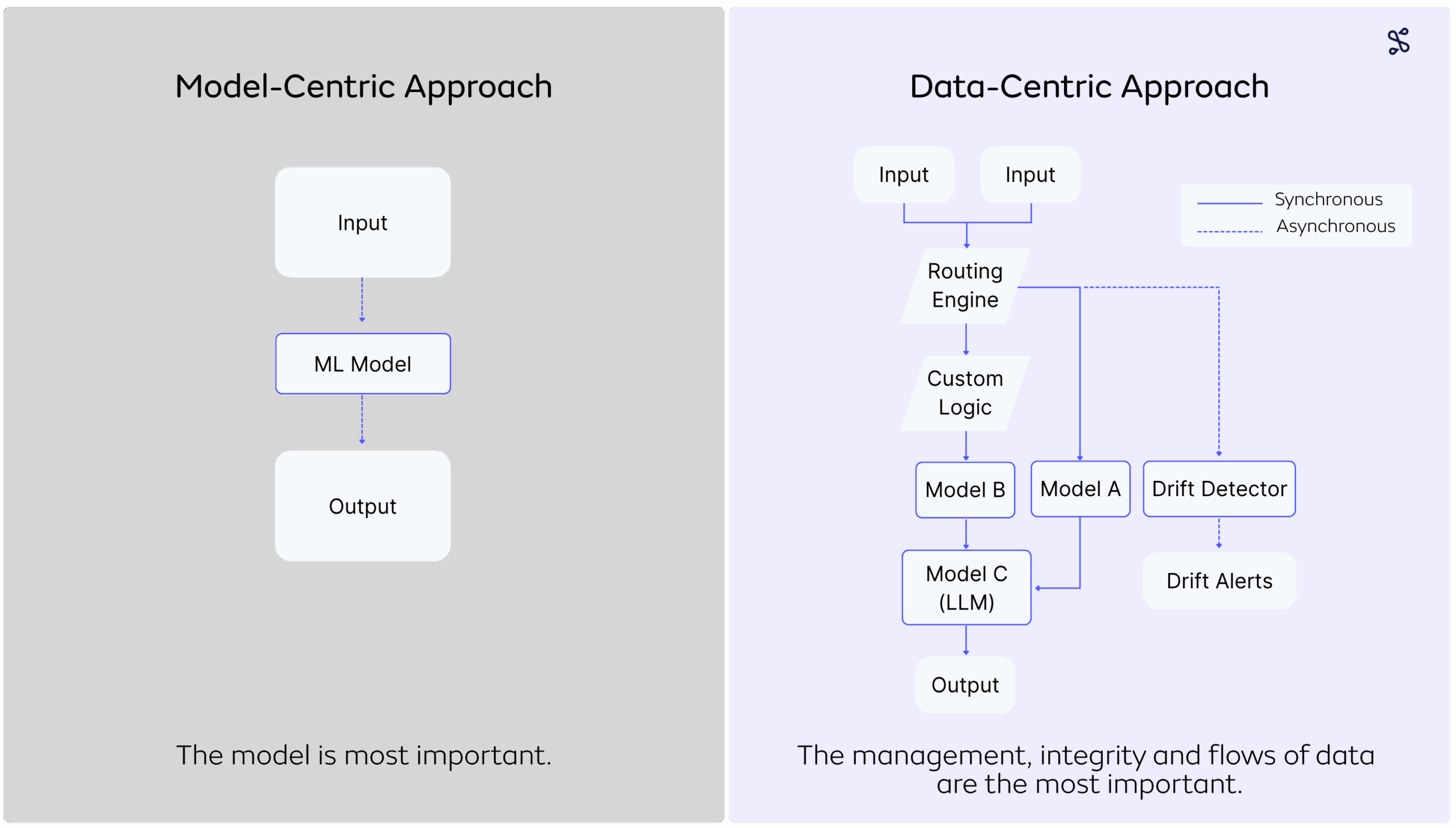

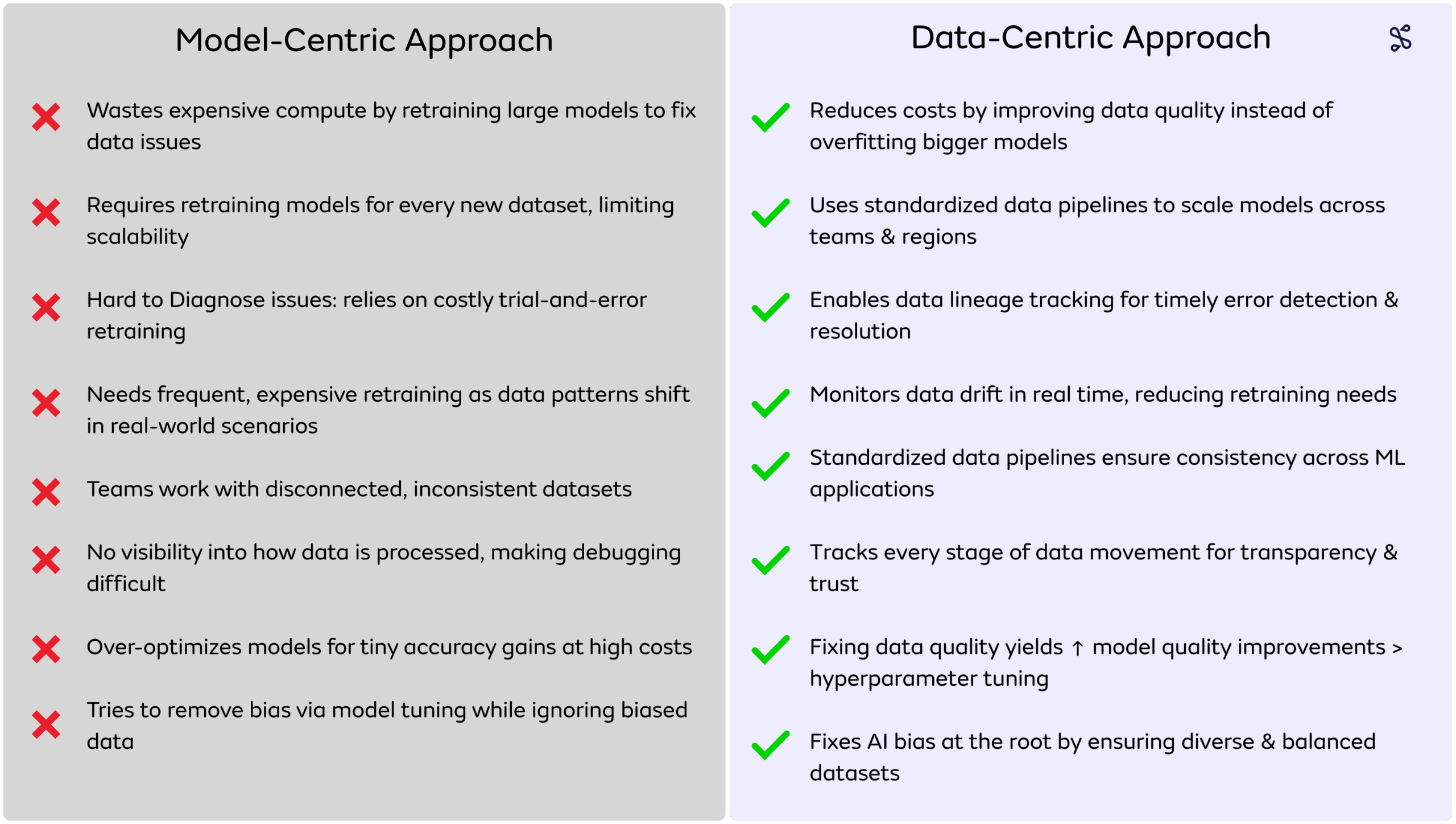

Traditionally, MLOps teams have prioritized models above all else. However, this approach often becomes costly and inefficient. Retraining massive models to fix underlying data issues doesn’t just waste compute; it delays time-to-market, drains budgets, and frustrates engineering teams. Imagine a financial services company constantly retraining its fraud detection models as transaction behaviors change. Without clear data observability, troubleshooting becomes guesswork, and expensive guesswork at that.

In addition, over-tuning models can deliver marginal accuracy gains while overlooking fundamental data-quality issues, including bias. This is the unfortunate reality many businesses face without realizing it.

The Power of Data-Centric MLOps

Enter data-centricity. Instead of obsessing over model perfection, this approach emphasizes managing the integrity, transparency, and efficient flow of data through each step of the pipeline. By prioritizing data quality and consistency, businesses gain clearer visibility, enabling quicker issue detection and significantly reducing unnecessary model retraining.

Consider a telecommunications company that struggled with AI-powered customer support automation. Before adopting a data-centric strategy, model downtime was a persistent headache. After moving to Seldon Core 2’s data-centric framework, the company’s observability improved dramatically, cutting model downtime by 40%. Real-time data monitoring let the team identify and resolve issues quickly, improving customer satisfaction and operational efficiency.

Core 2 Enables Data-Centric MLOps

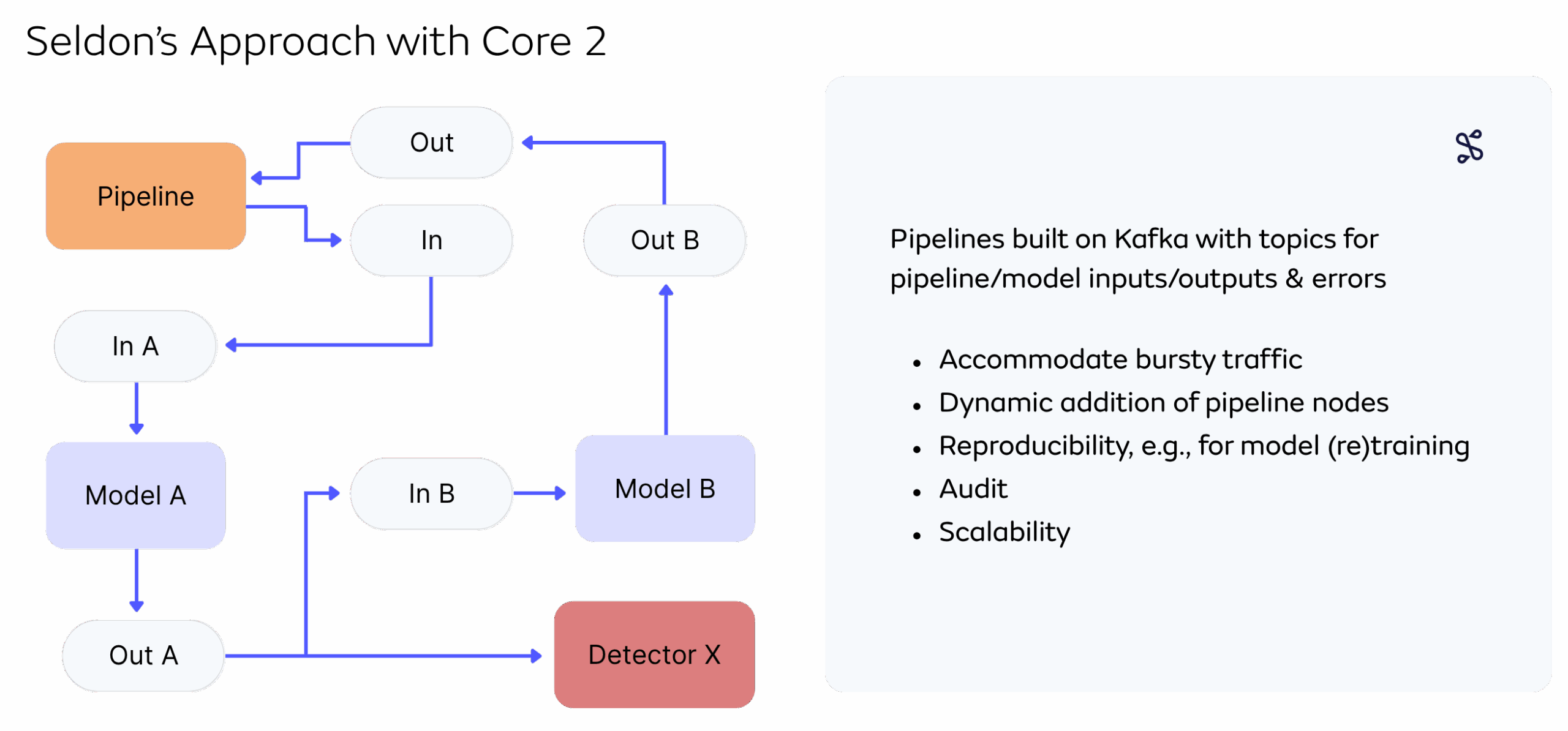

Core 2 takes data-centric MLOps from theory into practice. Built on modularity and real-time processing, Core 2 enables flexible, observable, and scalable AI deployments. Its Kafka-powered inference pipelines support real-time data flows that adjust dynamically to changing workloads and sudden spikes.

For example, imagine a manufacturing company using predictive maintenance. Machines fitted with IoT sensors feed real-time data into Seldon’s modular pipelines. If a sensor detects unusual vibrations, the system instantly routes this data to anomaly detection components, alerting teams proactively before critical failures occur. This modular, observable structure ensures reliability and minimizes downtime, directly impacting the company’s productivity and bottom line.
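To make the routing idea concrete, here is a minimal Python sketch of a step that sends each sensor reading either to a "normal" path or to an "anomaly" path. It is a hypothetical illustration, not Seldon Core 2’s API: the `Reading` and `VibrationRouter` names, the mm/s unit, and the rolling z-score rule (flag a reading more than `threshold` standard deviations from the recent mean) are all assumptions chosen for clarity. A real deployment would likely use a trained anomaly detector instead of a fixed statistical rule.

```python
from collections import deque
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Reading:
    """A single vibration measurement from a hypothetical IoT sensor."""
    sensor_id: str
    vibration_mm_s: float  # assumed unit: vibration velocity in mm/s


class VibrationRouter:
    """Routes readings to 'normal' or 'anomaly' using a rolling z-score.

    Hypothetical sketch only; this is not a Seldon Core 2 component.
    """

    def __init__(self, window: int = 20, threshold: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # recent readings judged normal
        self.threshold = threshold           # z-score above which we flag an anomaly
        self.min_history = min_history       # readings needed before scoring starts

    def route(self, reading: Reading) -> str:
        value = reading.vibration_mm_s
        destination = "normal"
        # Score only once there is enough history to estimate mean and spread.
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                destination = "anomaly"
        # Only normal readings update the baseline, so one spike
        # does not inflate the spread and hide the next one.
        if destination == "normal":
            self.history.append(value)
        return destination


router = VibrationRouter(threshold=3.0)
for v in [2.0, 2.1, 1.9, 2.05, 1.95, 2.0]:  # steady baseline vibration
    router.route(Reading("press-07", v))
print(router.route(Reading("press-07", 9.5)))  # sudden spike in vibration
```

In a pipeline, the "anomaly" destination would correspond to forwarding the reading to a downstream detection or alerting step, while "normal" readings continue along the usual inference path.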

Critical Questions to Ask When Comparing MLOps Platforms

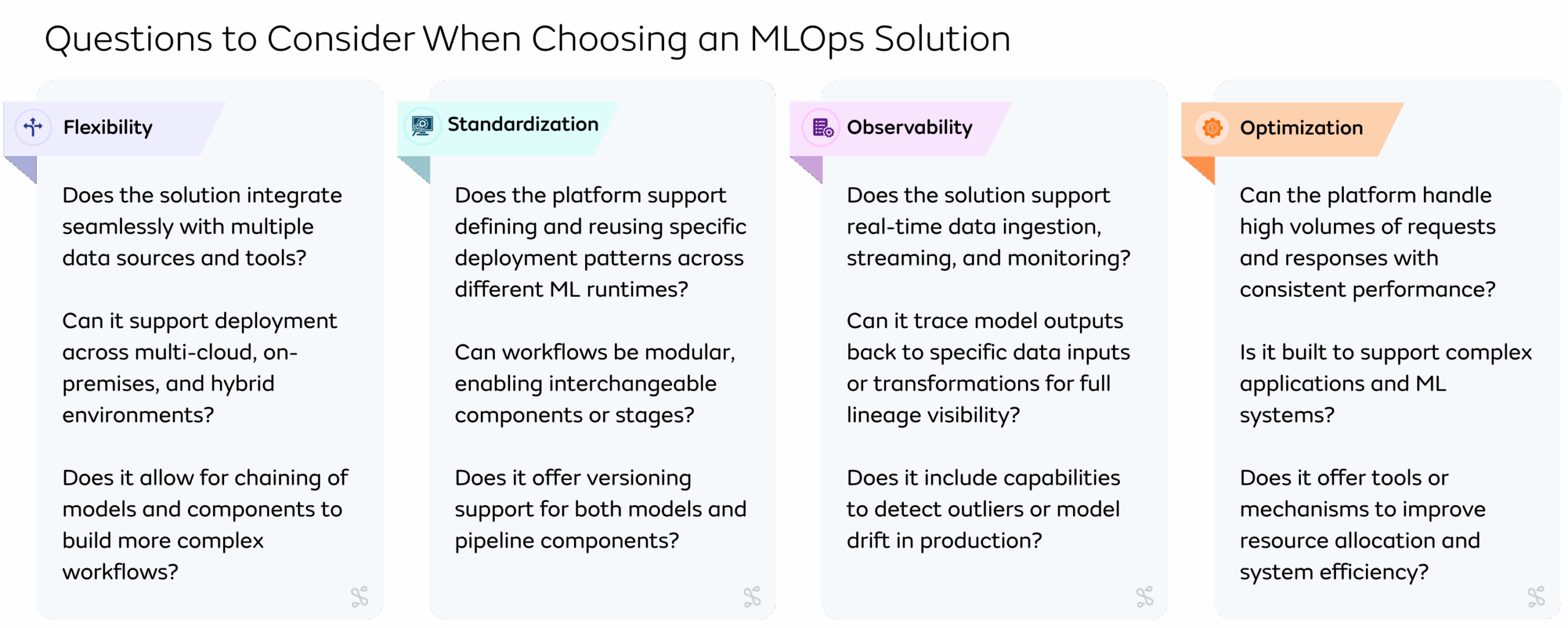

Choosing the right MLOps platform goes far beyond feature checklists. It requires a deep understanding of how the solution will perform under real-world conditions, starting with flexibility. Flexibility is key in environments where teams rely on a wide array of tools, data sources, and deployment options. Your platform should integrate seamlessly and adapt to diverse operational needs.

Standardization is less exciting to discuss, but equally important. As ML systems grow in scale and complexity, the ability to define reusable workflows, manage versioning, and maintain consistency across teams and runtimes becomes essential to delivering reliable, repeatable outcomes. Adopting a “learn once, repeat everywhere” approach early will help teams move faster when innovation is on the line.

Optimization seems obvious, but mass-market tooling often forgoes true optimization, creating black-box experiences. Choosing a tool that prioritizes optimization ensures your solution can handle the demands of production, from managing high-throughput requests to supporting complex, multi-model architectures, all while maximizing resource efficiency and minimizing latency.

Finally, observability is what makes everything measurable and manageable. Full pipeline visibility, data lineage, and proactive detection of drift or anomalies are not just ‘nice to have’; they’re foundational for building trust in your models and maintaining operational control.
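As an illustration of what "proactive detection of drift" can mean in practice, the sketch below computes the Population Stability Index (PSI), one common statistic for comparing a feature’s live distribution against its training-time reference. This is a technique swapped in for illustration: the post does not say which drift method any particular platform uses, and the equal-width binning, `bins=10`, and `eps` floor are assumptions. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be tuned per feature.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample and a current sample of one feature.

    Bins are equal-width over the reference range; values outside that
    range are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width if reference is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        total = len(values)
        # The eps floor keeps log() finite when a bin is empty.
        return [max(c / total, eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    # PSI = sum over bins of (current - reference) * ln(current / reference)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = [i / 100 for i in range(100)]       # e.g. training-time values
shifted = [0.5 + i / 200 for i in range(100)]   # live values concentrated higher
print(population_stability_index(reference, reference))  # identical samples
print(population_stability_index(reference, shifted))    # shifted samples
```

Running a check like this on a schedule, per feature, is one simple way to surface data issues before they show up as degraded model accuracy.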

Answering these questions will help ensure your chosen solution aligns with your strategic business objectives.

The Future is Data-Centric

Across today’s fast-paced, real-time industries (financial services firms combating fraud, telcos improving customer experiences, manufacturers optimizing machine health), the future is undeniably data-centric. Making your MLOps strategy data-centric means embracing transparency, modularity, and observability to unlock your AI’s full potential.

Ready to transform your AI strategy?

Watch our full webinar recording to dive deeper into the data-centric revolution, or request a consultation with the Seldon team to explore tailored solutions for your organization’s unique challenges.