In November 2022, the launch of ChatGPT marked a groundbreaking milestone in AI and highlighted the need for global AI regulation to evolve. Demand for the technology is so substantial that Gartner estimates more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications by 2026.

The reason for this demand is easy to understand: generative AI is a versatile tool with applications across many industries and roles. Its capabilities include, but are not limited to, automating customer service, analyzing complex and unstructured data, and powering internal agents (e.g., coding assistants or internal knowledge bots). By prioritizing the development and implementation of more sophisticated in-house AI systems, enterprises can expect fundamental changes to their operations, leading to greater efficiency, better decision-making, and enhanced customer experiences.

The rapid adoption of generative AI has spurred governments across the world to prepare new regulatory frameworks that aim to balance innovation with safeguards that keep AI safe and transparent. Until recently, governance of generative AI has largely relied on pre-existing, more generic AI regulations. However, the unique and expansive capabilities of generative AI models have brought to the fore the need for more prescriptive rules on their use. Let's explore the current state of AI regulation and where future regulation might be heading.

The EU AI Act

As AI technology continues to evolve, governments worldwide have proposed various regulatory frameworks. The European Union (EU), in particular, has adopted a risk-based, sector-agnostic framework in the EU AI Act.

The provisions of the Act were politically agreed in December 2023 and are set to take effect in 2025 at the earliest. While this may seem a long way off, once the Act is enforced, violations could incur penalties of up to 7% of an entity's global annual revenue, or fines ranging from €7.5 million to €35 million, depending on the severity of the breach. The EU AI Act sets out requirements according to the risk category of a given AI application:

- Unacceptable-risk systems, which are deemed incompatible with EU values. These include systems that manipulate behavior to cause harm, categorize individuals biometrically, provide social scoring, or use real-time remote biometric identification in public spaces. Systems in this category are banned in the EU.

- High-risk systems, which include AI applications integrated into regulated products such as medical devices, vehicles, or machinery, as well as standalone AI systems in areas such as biometric identification, critical infrastructure management, and education. These will be subject to stringent regulatory requirements because of their potential impact on health, safety, fundamental rights, and the environment.

- Limited-risk systems, such as chatbots, which must be transparent and inform users when they are interacting with an AI system, to minimize the risk of manipulation or deceit. Any generated content, such as deepfakes, should be clearly labeled.

- Minimal-risk systems, which encompass all other AI applications, such as spam filters or personalized content recommendation systems. These systems face the fewest regulatory constraints: while they are not subject to mandatory requirements under the AI Act, they are encouraged to adhere to general principles of ethical AI, including transparency, non-discrimination, and human oversight.

Regulating High-Risk Systems

Under the EU's framework, high-risk systems face the most requirements, which fall broadly into two categories: auditability and risk management.

Auditability requirements relate to the need for AI systems to be transparent and accountable. Specifically, this means that providers of these systems would need to demonstrate a combination of the following capabilities:

- Record-keeping of AI system or model outputs,

- Record-keeping of changes to the system,

- Monitoring (model performance, data drift, outlier detection),

- Explainability methods,

- Human oversight, and

- Registration in an EU-wide public database.
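The two record-keeping requirements above can be illustrated with a minimal sketch, assuming a simple in-memory store. The `AuditLog` class and its methods are hypothetical names, not part of any regulation or product API. Each entry stores the SHA-256 hash of the previous entry, so a later edit to a stored output or change record breaks the chain and is detectable. A production system would write to durable, access-controlled storage rather than a Python list.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the record."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only, hash-chained audit trail (in-memory sketch)."""

    def __init__(self):
        self.records = []

    def _append(self, kind: str, payload: dict) -> dict:
        record = {
            "kind": kind,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "payload": payload,
            "prev_hash": self.records[-1]["hash"] if self.records else GENESIS_HASH,
        }
        record["hash"] = _digest(record)  # computed before the "hash" key exists
        self.records.append(record)
        return record

    def log_prediction(self, model_version: str, inputs: dict, output: dict) -> dict:
        """Record-keeping of model outputs."""
        return self._append(
            "prediction",
            {"model_version": model_version, "inputs": inputs, "output": output},
        )

    def log_change(self, description: str, author: str) -> dict:
        """Record-keeping of changes to the system."""
        return self._append("system_change", {"description": description, "author": author})

    def verify(self) -> bool:
        """Recompute every hash; False if a stored entry was modified or the chain is broken."""
        prev_hash = GENESIS_HASH
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.log_change("Deployed credit-scoring model v2", author="ml-platform-team")
log.log_prediction("v2", {"income": 52000}, {"approved": True})
print(log.verify())
log.records[1]["payload"]["output"]["approved"] = False  # simulate tampering
print(log.verify())
```

Hash chaining only makes tampering evident; it does not prevent it, so the log itself would still need the access controls listed under risk management below.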

Risk management puts pressure on providers of AI systems to implement controls ensuring that their systems do not pose a significant threat to health, safety, or fundamental rights. Depending on the system and the risks it brings, this encompasses:

- Assurances as to the security, robustness, and availability of the system,

- Identity and access management,

- Testing mechanisms (e.g., A/B testing, shadow deployments, canary deployments), and

- Complex logical flows (e.g. fallbacks when models fail, or business logic to alter decisions based on model outputs).
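Two of the testing mechanisms above, shadow and canary deployments, can be sketched as a small request router. This is an illustrative assumption rather than any serving platform's API: `DeploymentRouter` and `canary_bucket` are hypothetical names, and models are plain Python callables. In shadow mode the candidate model sees live traffic but only the stable model's output is served; in canary mode a fixed fraction of requests, chosen by hashing the request ID, is served by the candidate.

```python
import hashlib
from typing import Any, Callable

Model = Callable[[dict], Any]


def canary_bucket(request_id: str, canary_fraction: float) -> bool:
    """Deterministically decide whether a request goes to the canary."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Keep 53 bits so the division is exact and the result is strictly below 1.0.
    position = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return position < canary_fraction


class DeploymentRouter:
    """Hypothetical router supporting shadow and canary deployments."""

    def __init__(self, stable: Model, candidate: Model,
                 canary_fraction: float = 0.0, shadow: bool = False):
        self.stable = stable
        self.candidate = candidate
        self.canary_fraction = canary_fraction
        self.shadow = shadow
        self.shadow_log = []  # (request_id, served_output, candidate_output)

    def predict(self, request_id: str, features: dict) -> Any:
        if self.shadow:
            # Shadow mode: run the candidate on live traffic, but serve the stable output.
            served = self.stable(features)
            self.shadow_log.append((request_id, served, self.candidate(features)))
            return served
        if canary_bucket(request_id, self.canary_fraction):
            return self.candidate(features)  # the canary slice of traffic
        return self.stable(features)


router = DeploymentRouter(stable=lambda f: "v1", candidate=lambda f: "v2", canary_fraction=0.05)
served = [router.predict(f"req-{i}", {}) for i in range(1000)]
print(served.count("v2"))  # about 5% of requests; the exact count depends on the IDs
```

Hashing the request ID instead of drawing a random number keeps each request's assignment stable across retries, which makes the canary's behavior easier to audit afterwards.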

The Impact of Global AI Regulations on Generative AI and Large Language Models (LLMs)

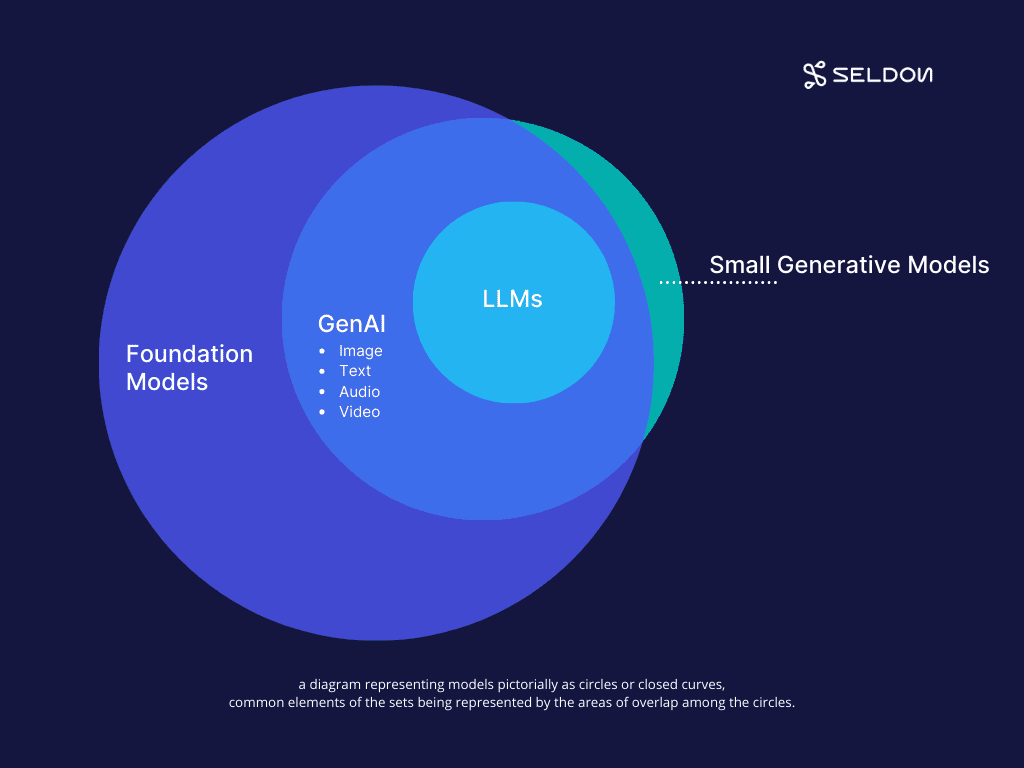

Reflecting the rapid evolution of AI, the EU AI Act was expanded in May 2023 to define two new categories for generative AI systems: General Purpose AI (also referred to as foundation models, defined as AI models trained on a very broad range of sources and large amounts of data) and generative foundation models (foundation models capable of generating content, including text, images, audio, and video).

Beyond the existing risk-based requirements, these amendments require all generative foundation models, irrespective of risk level, to:

- Disclose that content is AI-generated,

- Incorporate design measures that prevent the production of illegal content, and

- Transparently share copyrighted data used in training.
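One way to address the disclosure obligation in software is to attach both a machine-readable flag and a visible notice to every generated output. The sketch below assumes a hypothetical `LabeledOutput` record, `label_generation` helper, and notice wording; the Act does not prescribe this format, and provenance standards for media (such as C2PA) go considerably further.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# Hypothetical notice wording; not text prescribed by the regulation.
AI_NOTICE = "This content was generated by an AI system."


@dataclass
class LabeledOutput:
    content: str
    model_id: str
    ai_generated: bool = True  # machine-readable disclosure flag
    generated_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def render(self) -> str:
        """Human-readable form with a visible disclosure appended."""
        return f"{self.content}\n\n[{AI_NOTICE} Model: {self.model_id}]"


def label_generation(content: str, model_id: str) -> LabeledOutput:
    """Wrap raw model output together with disclosure metadata."""
    return LabeledOutput(content=content, model_id=model_id)


out = label_generation("Here is a summary of your contract.", model_id="demo-llm-v1")
print(out.render())
print(asdict(out))  # metadata that can travel with the content, e.g. in an API response
```

Keeping the flag as structured data, rather than only as appended text, lets downstream systems preserve the disclosure even when they reformat the content.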

Providers of generative AI models would need to demonstrate “Compliance-by-Design”, requiring gen AI models to adhere to standards that ensure safety, predictability, interpretability, corrigibility, and security, further emphasizing the necessity for auditability and risk management in all generative AI systems.

Finally, it is worth noting that although the EU AI Act applies to EU member states, it aims to serve as an example that champions AI regulation on a global scale.

Regulation in the US and UK: A non-binding, decentralized approach

The US and UK lag behind the EU in defining concrete regulation around the use of AI.

In the US, the Biden administration issued an Executive Order on Artificial Intelligence in October 2023, which outlined principles for the regulation of AI and directed executive agencies within the US government to develop their own sector-specific policies. These sector-specific risk assessments and associated recommendations will continue to be developed until November 2024. In the meantime, more concrete disclosure requirements have been imposed at the federal level, requiring developers to report vital information, especially AI safety test results, to the Department of Commerce. Individual states have also started enacting legislation, mostly around consumer privacy, that protects consumers from unfair use of their data in profiling use cases, such as the use of AI in hiring processes.

In the UK, the government released a white paper outlining its approach to AI regulation. As in the US, the development of AI regulation has been delegated to individual sectors, each of which is expected to define its own rules over the coming year.

Ensuring Global AI Regulation Compliance: Next Steps

Although regulation around the use of AI is still a work in progress, the time to start planning for current and future regulation is now. Any enterprise building products with AI should review the latest regulations in the countries where the product is developed and where it will be offered. Enterprises should then 1) assess the risk of each of their use cases, 2) understand where those use cases fall relative to the relevant regulation, and 3) develop a framework that ensures sufficient auditability and risk mitigation across all AI use cases.
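As a rough first pass at step 1, use cases can be triaged into the four EU risk tiers described earlier. The sketch assumes hypothetical attribute labels (`practices`, `domain`, and two boolean flags) that loosely mirror the categories listed above. It is an internal screening aid, not a legal determination; borderline cases would still need review against the Act's actual text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Set


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels, loosely based on the categories described in this article.
PROHIBITED_PRACTICES = {
    "harmful_manipulation", "biometric_categorization",
    "social_scoring", "realtime_public_biometric_id",
}
HIGH_RISK_DOMAINS = {
    "medical_device", "vehicle", "machinery",
    "biometric_identification", "critical_infrastructure", "education",
}


@dataclass
class UseCase:
    name: str
    practices: Set[str]               # practices the system performs
    domain: str                       # product or application area
    interacts_with_people: bool = False
    generates_content: bool = False


def triage(use_case: UseCase) -> RiskTier:
    """Check tiers from most to least severe and return the first match."""
    if use_case.practices & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if use_case.domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if use_case.interacts_with_people or use_case.generates_content:
        return RiskTier.LIMITED  # transparency obligations apply
    return RiskTier.MINIMAL


for case in [
    UseCase("Customer support chatbot", set(), "retail", interacts_with_people=True),
    UseCase("Diagnostic support tool", set(), "medical_device"),
    UseCase("Spam filter", set(), "email"),
]:
    print(f"{case.name}: {triage(case).value}")
```

Checking the most severe tier first means a use case that matches several categories is always reported at its highest applicable risk level.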

For auditability, this means establishing a comprehensive audit trail that captures and stores relevant data and system changes, implementing continuous monitoring that tracks and reports on model performance and data quality in real time, adopting explainability frameworks, and ensuring human oversight in critical decision-making processes.
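Data-quality monitoring can be sketched with the Population Stability Index (PSI), one common drift metric, swapped in here for illustration because the article does not name a specific method. The function compares the distribution of a feature in production against a reference sample, using bins placed at the reference quantiles: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and current proportions in bin i. The function names are hypothetical, and the 0.2 alert threshold is a widely used rule of thumb, not a regulatory value.

```python
import bisect
import math
import random


def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index of `current` against `reference` (both non-empty)."""
    ref_sorted = sorted(reference)
    # Interior bin edges at the reference sample's quantiles.
    edges = [ref_sorted[len(ref_sorted) * i // n_bins] for i in range(1, n_bins)]

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1  # bin index = number of edges <= v
        # Floor at eps so empty bins never produce log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_alert(reference, current, threshold=0.2):
    """True if PSI exceeds the (rule-of-thumb) threshold."""
    return psi(reference, current) > threshold


random.seed(0)
training_income = [random.gauss(50_000, 10_000) for _ in range(5_000)]
live_income = [random.gauss(58_000, 10_000) for _ in range(5_000)]
print(round(psi(training_income, live_income), 3))
print(drift_alert(training_income, live_income))
```

Running a check like this on a schedule, and writing each result to the audit trail, gives a record of when drift was detected and what was done about it.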

To address risk management requirements, enterprises should create a framework that evaluates the risks associated with deploying their AI and sets out mitigating measures. This should start by:

- Implementing cybersecurity measures that protect against unauthorized access, thereby preserving the integrity of AI systems.

- Allowing time to regularly test new models and applications using various deployment strategies, to identify potential issues before full-scale deployment.

- Designing logical flows that manage unexpected outcomes or failures, so that AI systems have the agility and resilience to operate safely and efficiently across a variety of situations.
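Logical flows for handling failures can be sketched as a wrapper around model calls. Everything here is an assumption for illustration: `predict_with_safeguards`, the 0.5 decision threshold, and the 0.1 review band are hypothetical. If the primary model raises an error or returns a score outside [0, 1], a fallback model is used instead; business logic then routes scores close to the threshold to human review, which also supports the human-oversight requirement.

```python
import logging
from typing import Callable

logger = logging.getLogger("inference")

ScoreModel = Callable[[dict], float]


def predict_with_safeguards(features: dict,
                            primary: ScoreModel,
                            fallback: ScoreModel,
                            approve_threshold: float = 0.5,
                            review_band: float = 0.1) -> dict:
    """Score with the primary model, fall back on failure, then apply business rules."""
    try:
        score = primary(features)
        if not 0.0 <= score <= 1.0:  # also rejects NaN, since NaN comparisons are False
            raise ValueError(f"score out of range: {score}")
        source = "primary"
    except Exception:
        logger.exception("Primary model failed; using fallback")
        score = fallback(features)
        source = "fallback"

    # Business logic: borderline scores are escalated to a human reviewer.
    if abs(score - approve_threshold) < review_band:
        decision = "human_review"
    elif score >= approve_threshold:
        decision = "approve"
    else:
        decision = "reject"
    return {"score": score, "source": source, "decision": decision}


def flaky_model(features: dict) -> float:
    raise TimeoutError("model server unavailable")


print(predict_with_safeguards({"income": 52000}, primary=flaky_model, fallback=lambda f: 0.8))
```

Returning the `source` alongside the decision means every fallback is visible in downstream logs, which ties this safeguard back to the auditability requirements.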

Additionally, enterprises should focus on fostering a culture of continuous learning and adaptation, encouraging open communication across departments to share insights and best practices around AI safety and compliance.

By prioritizing the education and training of staff on AI-related risks and security measures, organizations can further enhance their preparedness for changing or new regulatory requirements.

For more information on how Seldon can support these requirements based on your use case, join our LLM Module waitlist to speak with one of our in-house LLM experts and get a first look at Seldon's LLM Module, to be launched this spring.